Generative AI Product Problems #5: Security

What are the security risks of deploying LLMs to production, and what can you do to stay prepared?

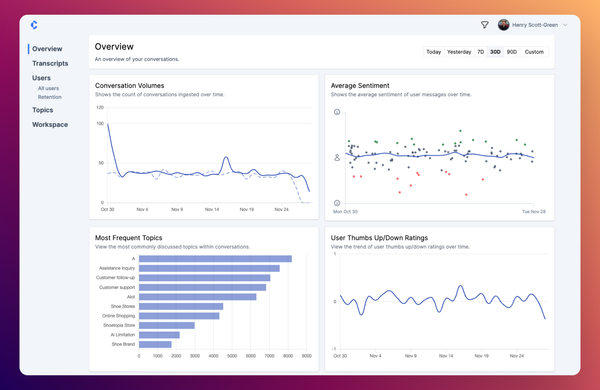

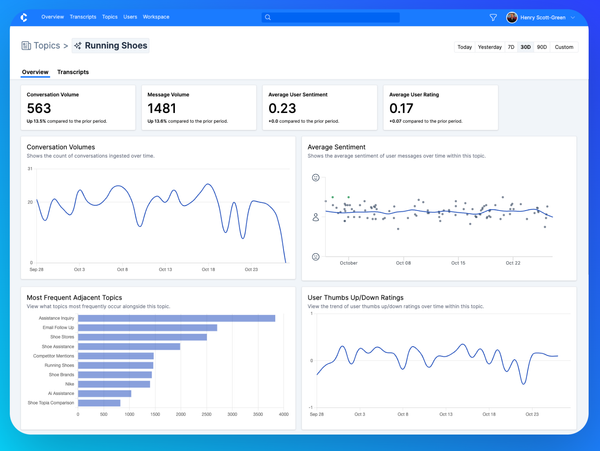

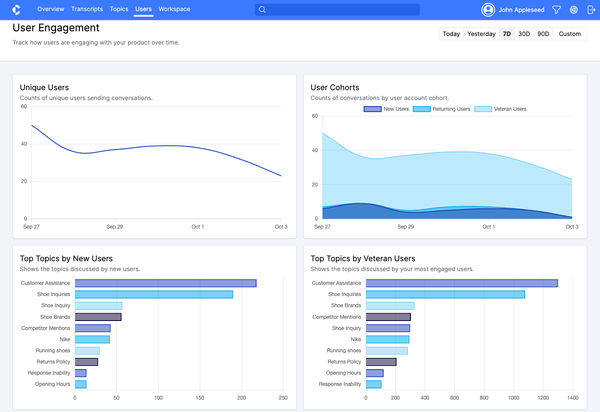

We’ve shipped evaluations, a product redesign, embedded analytics via API, user retention analytics, alerts for areas of poor performance, a new ingestion model, manual ratings, and many smaller improvements.

We've overhauled the Topics page, added pages for you to track individual users, and a whole lot more this month.

Don't let your LLM become a liability. Proactively monitor for toxic behavior to keep your AI respectful.

Intelligence isn't everything. Sometimes, LLMs are just too slow for good user experiences.

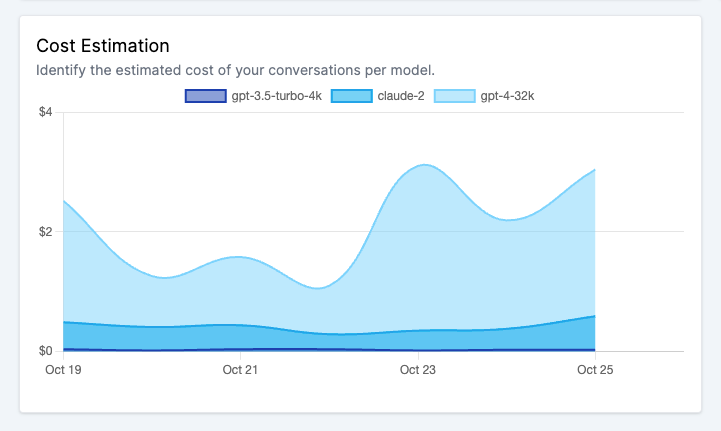

LLMs are a great way to cut costs. But they're also an easy way to blow your budget.

LLMs are notoriously fickle and prone to making up information.

Retrieval-augmented generation bridges the gap between LLMs and your private knowledge base. In this post, we’ll introduce RAG and discuss how to know if it’s the right strategy for your AI app.

Prompt engineering isn’t just for engineers. In this piece, we’ll apply a product lens to prompt engineering. We’ll review fundamental prompting techniques and the importance of iterating on prompts according to user feedback.

AI isn’t just a frontier for engineers. It also presents complex challenges for product leaders. In this post, we’ll walk through the AI product development cycle and show you how to improve user experiences even when they’re highly personalized and rarely identical.

Check out the latest features including the new Workspace and Users pages, expanded analytics capabilities, and PII filtering for protecting sensitive data.

Startups need to be 10x better than the competition to disrupt a market. With generative AI being a 10x technology in many ways, let’s explore how AI-native startups are using it to create outsized value.