Product Update | November 2023

We’ve shipped evaluations, a product redesign, embedded analytics via API, user retention analytics, alerts for areas of poor performance, a new ingestion model, manual ratings, and many smaller improvements.

It’s been another busy month at Context.ai! We’ve shipped evaluations (contact us to be enabled), a product redesign, embedded analytics via API, user retention analytics, alerts for areas of poor performance, a new ingestion model, manual ratings, and many smaller improvements. We’re also now SOC 2 Type II compliant, and we launched a fun side project: CodebaseChat.com.

Evaluations

Our biggest launch this month addresses a pain point we’ve consistently heard from customers over the past few months: evaluations. Developers of LLM applications regularly make changes to improve performance, such as refining prompts, RAG systems, or fine-tuned models. Evaluating the performance impact of these changes before launching them to real users is hard, and often relies on manual inspection, vibes, and many manually run scripts.

We now have a better way! Evals by Context.ai lets developers run sets of test cases through their application and assess performance with both global evaluators (for example, does the model apologize and fail to answer the question?) and per-query evaluators (does the output meet custom criteria specific to that input?).

This pairs well with our post-launch analytics products. Builders can first use analytics to identify areas of weak performance, then prove the impact of their fixes: pre-launch with our evaluators, and post-launch with our analytics. Please reach out to us to be enabled for this new feature!
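To make the difference between the two evaluator types concrete, here is a minimal Python sketch. It is not the Evals by Context.ai API: `EvalCase`, `refusal_evaluator`, `per_query_evaluator`, and `run_eval` are hypothetical names, and the keyword check for apologies is a deliberately crude stand-in for however a real global evaluator decides that the model failed to answer.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical types and evaluators for illustration only; not the Context.ai Evals API.


@dataclass
class EvalCase:
    input: str
    # Per-query criteria: strings this particular answer is expected to mention.
    must_mention: List[str] = field(default_factory=list)


# Crude keyword heuristic standing in for a global "apologized and didn't answer" check.
REFUSAL_MARKERS = ("i'm sorry", "i apologize", "i can't help", "i cannot help")


def refusal_evaluator(output: str) -> bool:
    """Global evaluator: applied to every case; passes if the output does not look like a refusal."""
    text = output.lower()
    return not any(marker in text for marker in REFUSAL_MARKERS)


def per_query_evaluator(case: EvalCase, output: str) -> bool:
    """Per-query evaluator: passes if the output mentions every string required for this input."""
    text = output.lower()
    return all(term.lower() in text for term in case.must_mention)


def run_eval(cases: List[EvalCase], app: Callable[[str], str]) -> List[Dict[str, object]]:
    """Run each case through the application and record both evaluator results."""
    results = []
    for case in cases:
        output = app(case.input)
        results.append({
            "input": case.input,
            "global_pass": refusal_evaluator(output),
            "per_query_pass": per_query_evaluator(case, output),
        })
    return results


if __name__ == "__main__":
    def fake_app(question: str) -> str:
        # Stand-in for a real LLM application.
        if "refund" in question:
            return "I'm sorry, I can't help with that."
        return "We offer Starter and Pro plans."

    cases = [
        EvalCase("What plans do you offer?", must_mention=["Starter", "Pro"]),
        EvalCase("How do I get a refund?", must_mention=["refund policy"]),
    ]
    for row in run_eval(cases, fake_app):
        print(row)
```

Running it prints one result row per case. The refund question fails both checks, because the stand-in app apologizes instead of answering and never mentions the refund policy.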

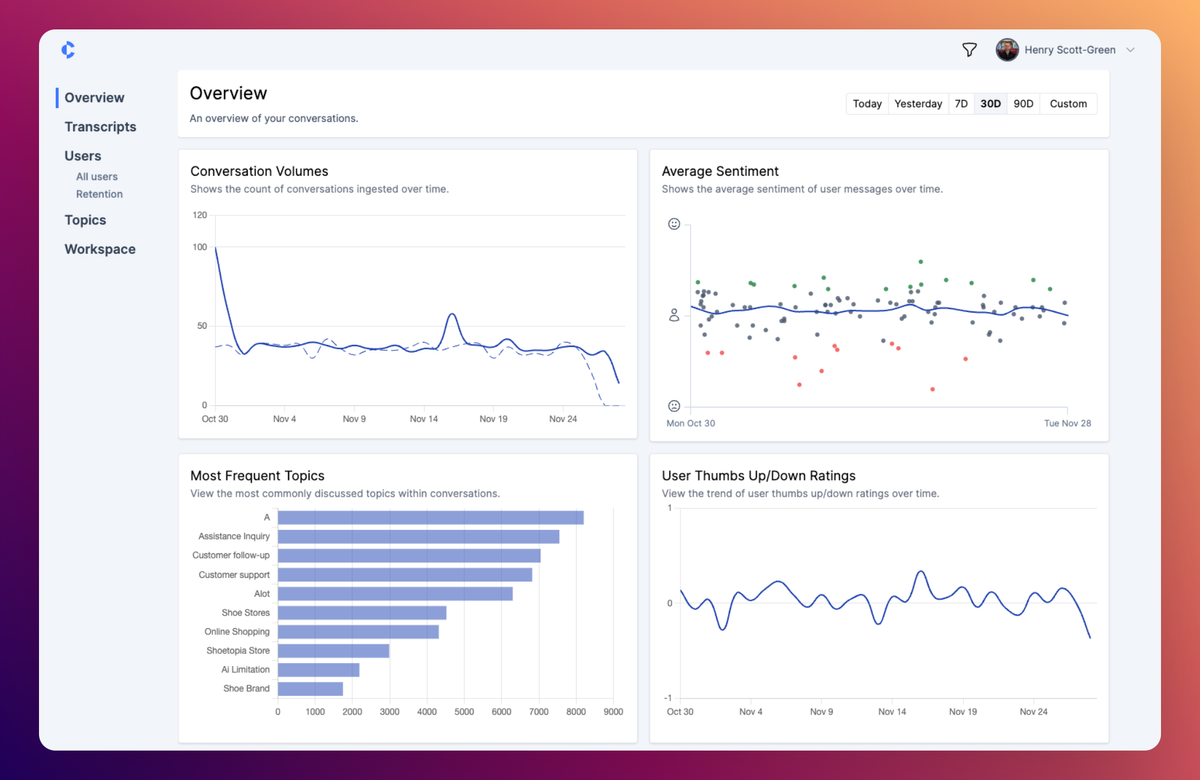

Product Redesign

Context.ai has a new and improved look! We’ve moved our top-level navigation to the left side of the application and made many UX tweaks throughout the product. Let us know if you have any feedback!

User Retention Analytics

We now report user retention metrics that track what proportion of your users repeatedly engage with your application. We show a day-by-day 30-day retention curve and a weekly cohort retention chart. This works out of the box for every customer sending user_ids in their transcripts.
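For readers curious about what these charts measure, here is a minimal Python sketch of one common way to compute them from `(user_id, date)` activity events. It reflects our own assumptions, not Context.ai’s implementation: day-N retention here is the share of eligible users who were active exactly N days after their first activity, a user only counts toward day N once N days have elapsed by the `as_of` date, and weekly cohorts are keyed by the Monday of each user’s first active week.

```python
from collections import defaultdict
from datetime import date, timedelta
from typing import Dict, Iterable, Set, Tuple

Event = Tuple[str, date]  # (user_id, day the user was active)


def _active_days(events: Iterable[Event]) -> Dict[str, Set[date]]:
    """Collect the set of distinct active days for each user."""
    days: Dict[str, Set[date]] = defaultdict(set)
    for user_id, day in events:
        days[user_id].add(day)
    return days


def day_n_retention(events: Iterable[Event], as_of: date, max_day: int = 30) -> Dict[int, float]:
    """Share of eligible users active exactly n days after their first activity, for n = 0..max_day."""
    per_user = []
    for days in _active_days(events).values():
        first = min(days)
        per_user.append((first, {(d - first).days for d in days}))
    curve: Dict[int, float] = {}
    for n in range(max_day + 1):
        # Only users whose first activity was at least n days before `as_of` can have reached day n.
        eligible = [offsets for first, offsets in per_user if (as_of - first).days >= n]
        if eligible:
            curve[n] = sum(1 for offsets in eligible if n in offsets) / len(eligible)
    return curve


def weekly_cohort_retention(events: Iterable[Event]) -> Dict[date, Dict[int, float]]:
    """For each cohort (Monday of the first active week), the share of its users active in week k."""
    cohorts: Dict[date, Dict[int, Set[str]]] = defaultdict(lambda: defaultdict(set))
    for user_id, days in _active_days(events).items():
        first = min(days)
        cohort_start = first - timedelta(days=first.weekday())  # Monday of the first active week
        for d in days:
            cohorts[cohort_start][(d - cohort_start).days // 7].add(user_id)
    table: Dict[date, Dict[int, float]] = {}
    for cohort_start, weeks in cohorts.items():
        size = len(weeks[0])  # every cohort member is active in week 0 by construction
        table[cohort_start] = {k: len(users) / size for k, users in sorted(weeks.items())}
    return table
```

Counting activity on exactly day N is only one convention; "unbounded" retention instead counts a user as retained on day N if they were active on any day at or after N, which produces a curve that never increases.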

Embedded API

You can now access the results of our analytics (sentiment analysis, topical clustering, LLM cost estimation, and more) via API, so you can embed our analytics in your application.

With multi-tenant support, the API lets you surface analytics to each of your end users. Read our API documentation to get started.

Poor Performance Alerts

Automatic alerts for areas of poor performance are a fast way to find out what’s going on with your LLM app.

Go to the main dashboard and you’ll now see actionable suggestions highlighting underperforming areas of user behavior. Click Investigate to dig deeper, so you can improve your product and turn around your metrics.

Threads API Support

OpenAI recently announced threads as part of their new Assistants API. Instead of passing all previous messages in the context window with every request, you can simply append new messages to a thread.

It’s just as easy to log these messages to Context.ai for your product analytics. Use a consistent ID for each thread you want to log, and new messages will automatically be added to the right conversation thread. See our documentation for details.
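A consistent ID is enough because messages that share an ID can simply be grouped into one conversation as they arrive. The Python sketch below illustrates that idea with a hypothetical in-memory `ThreadLog` class; it is not the Context.ai SDK or ingestion API, and keying on the OpenAI thread ID (shown as placeholders like `"thread_abc"`) is our assumption about one natural choice of ID.

```python
from collections import defaultdict
from typing import Dict, List


class ThreadLog:
    """Hypothetical in-memory transcript store keyed by thread ID (not the Context.ai SDK)."""

    def __init__(self) -> None:
        self._threads: Dict[str, List[Dict[str, str]]] = defaultdict(list)

    def log(self, thread_id: str, role: str, content: str) -> None:
        # Messages that share a thread_id are appended to the same conversation, in arrival order.
        self._threads[thread_id].append({"role": role, "content": content})

    def conversation(self, thread_id: str) -> List[Dict[str, str]]:
        # Return a copy so callers cannot mutate the stored transcript; unknown IDs yield [].
        return list(self._threads.get(thread_id, []))


if __name__ == "__main__":
    log = ThreadLog()
    # Placeholder thread IDs; messages from two threads arrive interleaved.
    log.log("thread_abc", "user", "How do I reset my password?")
    log.log("thread_xyz", "user", "What plans do you offer?")
    log.log("thread_abc", "assistant", "You can reset it from the settings page.")
    print(len(log.conversation("thread_abc")))  # 2
```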

Manual Rating

You can now manually apply ratings to transcripts in the Context.ai platform. This follows last month’s launch of manual labels, and allows you to manually review transcripts for performance, and highlight problem areas for team members to investigate.

SOC 2 Type II

From day one, we’ve been committed to security and privacy. Now, that’s been verified by an independent audit.

We’ve worked hard to earn and maintain the trust of our customers, both enterprises and startups alike. Securely managing conversation data and other sensitive information is a responsibility we take seriously.

Earlier this year, we completed our SOC 2 Type I audit. We’re proud to have gone a step further and completed a Type II audit, which demonstrates our compliance with the AICPA’s Trust Services Criteria over an extended period of time.

As we continue to build the analytics platform for LLM applications, security and privacy will always be top priorities.

CodebaseChat

CodebaseChat is a fun side project that lets anyone create a GPT chatbot from a GitHub repo, in just 30 seconds.

It helps you quickly onboard to new codebases, understand system design, and learn how things work, even if you’re less technical.

Navigate to CodebaseChat.com and enter any GitHub repo link. You’ll receive a file you can upload to OpenAI to create a custom GPT chatbot for your codebase. It’s 100% free and open source.

Smaller changes

- Significant performance improvements throughout the app have dramatically reduced page load times

- Improvements to suggested topic detection, resulting in more distinct topic suggestions

- A single custom topic can now be matched against multiple strings
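To illustrate the last item, here is a minimal Python sketch of a custom topic defined by several strings, where a transcript matches the topic if it contains any of them. Case-insensitive substring matching and the `match_topics` helper are our assumptions for illustration; Context.ai’s actual matching logic may differ.

```python
from typing import Dict, List


def match_topics(transcript: str, topics: Dict[str, List[str]]) -> List[str]:
    """Return the names of topics whose strings appear in the transcript (hypothetical helper)."""
    text = transcript.lower()
    # A topic matches if ANY of its strings occurs in the transcript, ignoring case.
    return [name for name, strings in topics.items() if any(s.lower() in text for s in strings)]


topics = {
    "billing": ["invoice", "refund", "charged twice"],
    "account access": ["password", "sign in", "locked out"],
}
print(match_topics("I was charged twice, can I get a refund?", topics))  # ['billing']
```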

Feedback?

We’re iterating quickly and always looking for input from our users. Feel free to reach out with suggestions or feedback so we can improve the product!