Generative AI Product Problems #2: Cost

LLMs are a great way to cut costs. But they're also an easy way to blow your budget.

A picture’s worth 1,000 words. But how much is 1,000 words worth? Well, if GPT-4 generates them, then about $0.16.

It’s easy to think of AI as a way to cut costs, and it often is, but you have to do the math. $0.16 for 1,000 words doesn’t sound like much… until you multiply it across millions of tasks at enterprise scale.

Generating outputs isn’t the only cost, either. Whether you’re sending inputs to a model, generating images, or transcribing audio, there’s a price for everything.
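Here is a minimal sketch of that math, assuming token-based pricing with separate input and output rates. The rates and token counts are placeholders, not any provider’s actual prices. The 1,333-token reply uses a common rule of thumb that 1,000 English words is roughly 1,333 tokens.

```python
from dataclasses import dataclass


@dataclass
class TokenPricing:
    # Placeholder USD prices per 1,000 tokens; substitute your provider's current rates.
    input_per_1k: float
    output_per_1k: float


def request_cost(pricing: TokenPricing, input_tokens: int, output_tokens: int) -> float:
    """Cost of one request: input and output tokens are billed at separate rates."""
    return (input_tokens / 1000) * pricing.input_per_1k + (output_tokens / 1000) * pricing.output_per_1k


def projected_cost(pricing: TokenPricing, input_tokens: int, output_tokens: int, num_tasks: int) -> float:
    """Scale the per-request cost up to the full task volume."""
    return request_cost(pricing, input_tokens, output_tokens) * num_tasks


if __name__ == "__main__":
    pricing = TokenPricing(input_per_1k=0.03, output_per_1k=0.06)  # assumed rates
    # Assumed workload: a 500-token prompt and a ~1,333-token (~1,000-word) reply.
    per_task = request_cost(pricing, 500, 1333)
    total = projected_cost(pricing, 500, 1333, 1_000_000)
    print(f"Per task: ${per_task:.4f}; per million tasks: ${total:,.0f}")
```

A fraction of a cent per task becomes tens of thousands of dollars at a million tasks, which is why the input side of the bill deserves as much attention as the output side.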

So how do you do “the math”?

It comes down to return on investment. That’s rarely easy to measure, though.

Returns come in many forms: user retention, higher engagement, increased sales. Try to map each of these to a dollar value to justify your spend.
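Once returns have a dollar value, the standard ROI formula compares them with the spend that produced them. The figures in this worked example are hypothetical: a feature credited with $50,000 a month in retained revenue against $10,000 a month in LLM spend.

```latex
\text{ROI} = \frac{\text{Return} - \text{Cost}}{\text{Cost}}
\qquad\text{e.g.}\qquad
\frac{\$50{,}000 - \$10{,}000}{\$10{,}000} = 4 \;\; (400\%)
```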

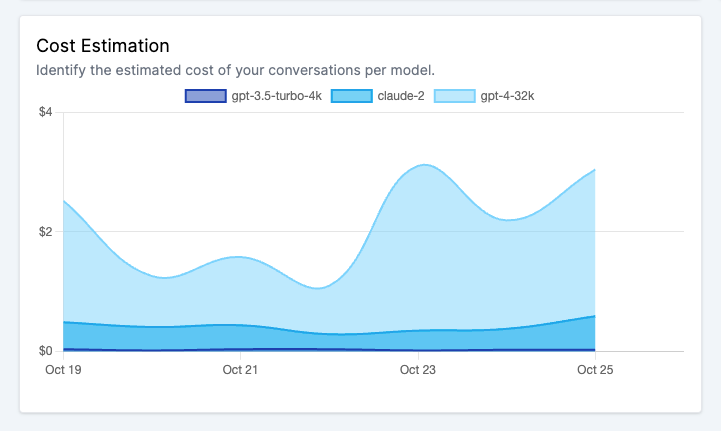

Investments can be tricky to measure, too. Every model is priced differently, and usage can be hard to predict. Your historical usage data is the best basis for an estimate.
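One way to turn historical data into a spend estimate is sketched below, assuming your logs record input and output token counts per request and that pricing is per 1,000 tokens. The log format, function names, and rates are all hypothetical. The high figure applies the 95th-percentile request cost to every request, a deliberately conservative planning buffer rather than a statistical confidence interval.

```python
from statistics import mean, quantiles
from typing import Sequence, Tuple


def logged_request_cost(input_tokens: int, output_tokens: int,
                        input_per_1k: float, output_per_1k: float) -> float:
    """Cost of one logged request under per-1,000-token pricing (assumed model)."""
    return (input_tokens / 1000) * input_per_1k + (output_tokens / 1000) * output_per_1k


def estimate_monthly_spend(history: Sequence[Tuple[int, int]],
                           input_per_1k: float, output_per_1k: float,
                           expected_requests: int) -> Tuple[float, float]:
    """Return (expected, high) spend for the forecast request volume.

    `history` holds (input_tokens, output_tokens) pairs from past requests;
    it needs at least two entries for the percentile calculation.
    """
    costs = [logged_request_cost(i, o, input_per_1k, output_per_1k) for i, o in history]
    expected = mean(costs) * expected_requests
    # 95th-percentile per-request cost, interpolated within the observed range.
    p95 = quantiles(costs, n=20, method="inclusive")[-1]
    return expected, p95 * expected_requests


if __name__ == "__main__":
    # Hypothetical log excerpt and placeholder prices.
    history = [(400, 900), (650, 1200), (500, 1000), (1800, 2500), (450, 800)]
    expected, high = estimate_monthly_spend(history, 0.03, 0.06, expected_requests=200_000)
    print(f"Expected: ${expected:,.0f}/month; conservative: ${high:,.0f}/month")
```

Long-tail requests (large prompts, long replies) pull the high figure up, which is a useful signal that capping context or output length could make spend more predictable.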

It’s not an all-or-nothing decision. Calculate the incremental ROI from making different tradeoffs. Could you swap in a different LLM or use a shorter context window? You just might achieve 90% of the quality for 10% of the cost.
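Here is a sketch of that incremental comparison, assuming you can attach a monthly dollar return and a monthly LLM cost to each configuration. The options and figures are hypothetical. The cheaper option’s return assumes, for illustration only, that value scales with quality (90% of the quality yielding 90% of the return), which will not hold for every product.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    monthly_return: float  # dollar value attributed to the feature (retention, engagement, sales)
    monthly_cost: float    # LLM spend for this configuration

    @property
    def net_value(self) -> float:
        return self.monthly_return - self.monthly_cost

    @property
    def roi(self) -> float:
        return self.net_value / self.monthly_cost


def incremental_gain(current: Option, candidate: Option) -> float:
    """Change in net monthly value from switching `current` to `candidate`."""
    return candidate.net_value - current.net_value


if __name__ == "__main__":
    baseline = Option("large model, long context", monthly_return=100_000, monthly_cost=20_000)
    # Hypothetical: 90% of the quality for 10% of the cost, assuming return scales with quality.
    cheaper = Option("smaller model, short context", monthly_return=90_000, monthly_cost=2_000)
    for opt in (baseline, cheaper):
        print(f"{opt.name}: net ${opt.net_value:,.0f}, ROI {opt.roi:.0%}")
    print(f"Switching changes net value by ${incremental_gain(baseline, cheaper):,.0f}/month")
```

The sketch reports net value alongside ROI because ROI alone can favor a cheap option that returns less in absolute dollars; the switch is worthwhile only if the return you give up is smaller than the cost you save.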

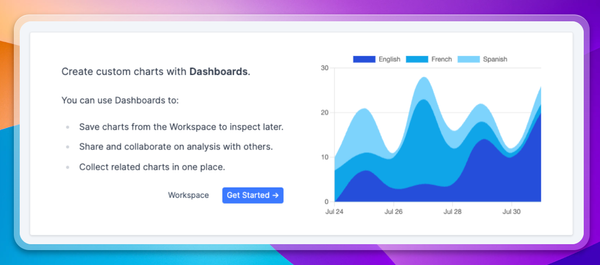

At Context.ai, we’re building the analytics solution so you can calculate your LLM’s ROI: everything from estimating spend to measuring user value. To learn more, request a demo at context.ai/demo.