The Ultimate Guide to LLM Product Evaluation

Large Language Models are incredibly impressive, and the number of products with LLM-based features is growing rapidly.

But the excitement of launching an LLM product is often followed by important questions: How well is it working? Are my changes improving it? The answers usually come from rudimentary, home-grown evaluations (or evals) that are run ad hoc. These “vibe evals” are often manual, so they are tedious to run and don’t give a thorough assessment. And that’s if they’re run at all.

This experience is the norm: running LLM evals is hard, for a few reasons.

Evaluating an LLM product’s quality is unlike tasks that have come before. When evaluating the correctness of deterministic applications, or even non-LLM machine learning based applications, robust tooling and best practices exist. Knowing the set of acceptable responses, and how to evaluate them, is achievable using industry playbooks. And while that is slowly becoming the case for LLM applications, it is still early days.

So, if you are on your own evals journey and want to get it right without reinventing the wheel, you're in the right place. In this post, we’ll survey the LLM evaluations landscape and share best practices for running LLM product evals, including the key considerations for implementing them in your organization.

Navigating LLM Evaluations

The first step in understanding the LLM evals space is clarifying the overloaded concept of LLM evals. LLM evals actually fall into one of two buckets: model evals and product evals. The distinction isn’t just academic; it determines whether you’re applying the right kinds of evals to your product.

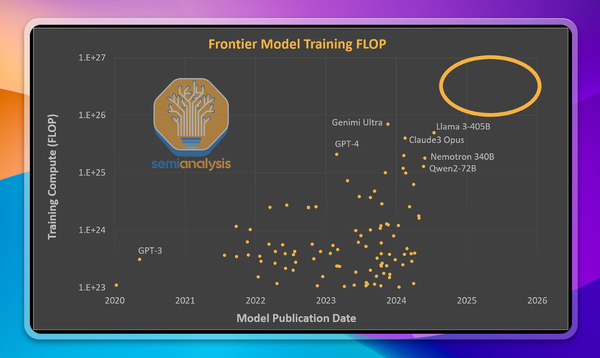

Model Evaluations: Primarily the Realm of LLM Providers

Model evaluations are completed by organizations building foundation models, like OpenAI, Google, and other LLM providers, and are meant to evaluate the quality of the models themselves.

That being said, these evals do have a place in the decision-making of an organization that isn’t building a foundation model. It’s just a smaller, more limited one: figuring out which foundation model is right for your use case. Examples of model evals include TruthfulQA, which measures the truthfulness of model responses, and MMLU (Massive Multitask Language Understanding), which measures knowledge and problem-solving across 57 subjects. Hugging Face even hosts a leaderboard comparing open-source LLMs across these metrics, alongside many others. But overreliance on academic benchmarks can be dangerous for production use cases, for a few reasons:

Academic Benchmarks are Limited (on purpose): While valuable for comparing base models, benchmark scores do not necessarily translate to effectiveness in diverse, real-world applications. For example, a real-world application will likely use Retrieval-Augmented Generation (RAG) or multi-call chains, which benchmarks do not exercise. This gap between benchmarks and real-world workflows can skew your understanding of a model's potential.

Optimization for Benchmarks: A natural consequence of the reliance on benchmarks is the inclination for model builders to tune their models to excel at those same benchmarks. In some cases, the benchmarks themselves may even be part of the model's training data, making it unclear whether performance on them reflects generalization (good) or memorization (bad). Additionally, building a benchmark that actually measures generalization is quite hard, so many existing benchmarks likely don't. That makes their scores even less useful for assessing model quality in real-world use cases.
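One common heuristic for spotting this kind of leakage is n-gram overlap between benchmark items and the training corpus. The sketch below is illustrative only: it assumes you have the corpus text (model consumers usually do not), and the word-level tokenization and default `n=8` are arbitrary choices, not a standard.

```python
def ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Return the set of lowercased, whitespace-tokenized word n-grams in `text`."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def contamination_ratio(benchmark_item: str, corpus_docs: list[str], n: int = 8) -> float:
    """Fraction of the benchmark item's n-grams that also appear somewhere in the corpus.

    A ratio near 1.0 suggests the item (or something very close to it) was seen in training.
    """
    item_grams = ngrams(benchmark_item, n)
    if not item_grams:  # item shorter than n words: nothing to compare
        return 0.0
    corpus_grams: set[tuple[str, ...]] = set()
    for doc in corpus_docs:
        corpus_grams |= ngrams(doc, n)
    return len(item_grams & corpus_grams) / len(item_grams)
```

A high ratio does not prove memorization, and a low one does not rule it out (paraphrased leakage slips past exact n-gram matching), which is part of why benchmark scores are hard to interpret.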

Product Evaluations: Assessing LLMs in the Wild

In contrast, LLM product evals assess how LLMs perform once they are integrated into an application, evaluating the application as a whole. Understanding and tuning the performance of these systems, rather than of the model underpinning a single (albeit important) piece of them, is where most organizations will spend the majority of their time. If you build products for users, rather than models for product builders, this is likely what you care about, too.

It makes sense for organizations to spend time here: it’s the part of the LLM application stack where they directly create value. But the space that LLM product evaluation metrics have to cover is huge. It ranges from RAG metrics (e.g., the relevance of retrieved documents) to hallucinations, question-answering accuracy, toxicity, and more. Product evals also avoid many academic benchmark traps, since the real-world performance of LLM-based applications often hinges on factors that academic benchmarks are ill-equipped to assess, like handling edge cases and having robust guardrails against inappropriate content in the product, not just the model.

How Should You Run Product Evals?

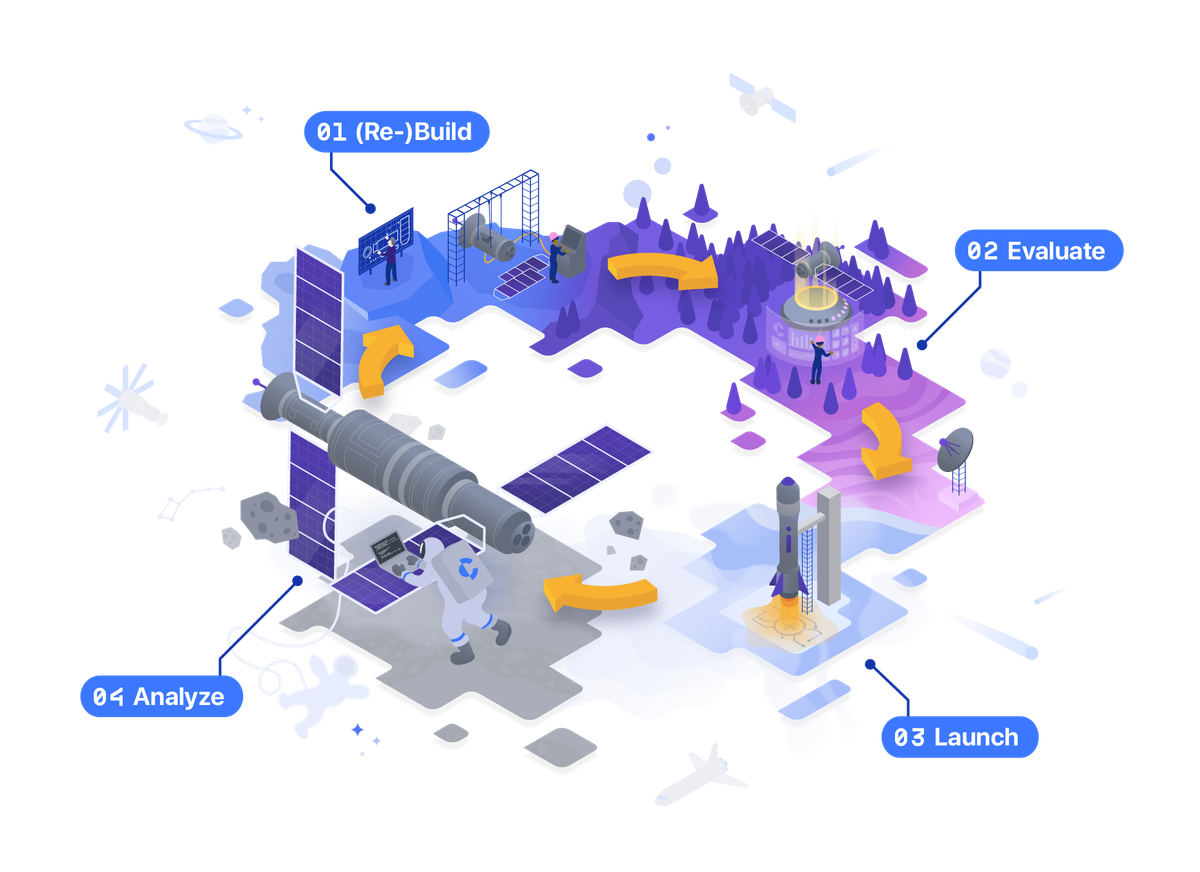

Once you understand the role of model and product evals in developing your LLM application, the next steps are defining test inputs, establishing evaluation criteria, and defining ground truth answers. Here’s how to approach each step so your LLM application not only meets expectations but exceeds them:

Define a Broad Range of Representative Test Inputs

The foundation of a robust evaluation framework is a comprehensive set of test inputs. These test inputs do a lot of heavy lifting: ideally, they will represent the diversity of real-world scenarios your application will encounter. There are a few approaches you can take here:

- Sampling past real user inputs: If your application is live, historical user data is a goldmine of representative test cases. Analyzing this data can help you understand common queries, frequent use cases, and edge cases that have previously challenged your application.

- Incorporating typical usage and adversarial inputs: Beyond looking at common interactions, it’s vital to test how your application handles adversarial inputs. These are scenarios that probe the limits of your product, including attempts to provoke errors or toxic output.

- Generating synthetic inputs: The powerful idea here is that synthetic data, generated by an LLM and based on some human-curated or real past inputs, can be used to bootstrap the set of test inputs. This is especially useful if the set of real past inputs is small or nonexistent.

Your evaluation system will probably use a mix of these options, leaning on some more heavily than others depending on your application's maturity. For example, a brand-new application may rely mostly on synthetic data generated from a small set of curated, human-crafted inputs. An application that already has usage, by contrast, can combine its past data with synthetic data that extends it to achieve reasonable coverage.
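To make the mix concrete, here is a minimal sketch of assembling such a test set. Everything in it is hypothetical: `EvalInput`, `build_test_set`, and the default sample sizes are illustrative names and values, and `synthesize` stands in for whatever LLM call you use to generate a new input from a seed.

```python
import random
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalInput:
    text: str
    source: str  # "real", "curated", "adversarial", or "synthetic"


def build_test_set(
    real_inputs: list[str],         # past user queries (empty for a brand-new app)
    curated_inputs: list[str],      # human-crafted examples
    adversarial_inputs: list[str],  # inputs that probe limits and guardrails
    synthesize: Callable[[str], str],  # hypothetical: seed input -> new input (e.g. an LLM call)
    n_real: int = 50,
    n_synthetic: int = 50,
    seed: int = 0,
) -> list[EvalInput]:
    rng = random.Random(seed)  # fixed seed so the same test set is rebuilt on every run
    sampled_real = rng.sample(real_inputs, min(n_real, len(real_inputs)))
    # Synthetic inputs extend whatever real and curated data exists.
    seeds = sampled_real + list(curated_inputs)
    synthetic = [synthesize(rng.choice(seeds)) for _ in range(n_synthetic)] if seeds else []
    cases = [EvalInput(t, "real") for t in sampled_real]
    cases += [EvalInput(t, "curated") for t in curated_inputs]
    cases += [EvalInput(t, "adversarial") for t in adversarial_inputs]
    cases += [EvalInput(t, "synthetic") for t in synthetic]
    return cases
```

For a new application, `real_inputs` is simply empty and the synthetic cases are seeded entirely from the curated set; as real traffic accumulates, the same function shifts toward sampled real inputs without any code changes.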

Establish Global Evaluation Criteria

With a diverse set of test inputs in hand, you’ll need clear and measurable criteria for evaluation. While some criteria will depend on the specific inputs being tested (and we’ll get to those later), it makes sense to start with global criteria, which are requirements for responses that are true across all test cases. These can include:

- Hallucination: where the LLM states something as fact that is verifiably not true.

- Response refusal: where the LLM should produce a result, but refuses, often while apologizing.

- Brand voice alignment: ensuring the LLM never produces text content that is misaligned with desired attributes of a brand voice (tone, friendliness, clarity, etc.).

These criteria, and others like them, are run over every query, since they are relevant in any test case. What makes them global is that their pass criteria are defined once for all test cases, rather than being specific to an individual input.
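The defining property (one pass rule applied to every response) can be sketched as a dictionary of checks run over all outputs. This is a deliberately simple, hypothetical example: the refusal regexes and banned brand terms are placeholders, and a hallucination check is omitted because it generally needs an LLM judge or reference data rather than a pattern match.

```python
import re

# Hypothetical refusal phrasings; tune these to how your own model refuses.
REFUSAL_PATTERNS = [
    r"\bI(?:'m| am) sorry\b",
    r"\bI can(?:not|'t) (?:help|assist)\b",
    r"\bas an AI\b",
]

# Hypothetical brand-voice rule: words that should never appear in a response.
BANNED_BRAND_TERMS = {"dude", "lol"}


def is_refusal(response: str) -> bool:
    return any(re.search(p, response, flags=re.IGNORECASE) for p in REFUSAL_PATTERNS)


def violates_brand_voice(response: str) -> bool:
    words = set(re.findall(r"[a-z']+", response.lower()))
    return bool(words & BANNED_BRAND_TERMS)


# Global criteria: each check has the same pass rule for every test case.
GLOBAL_CRITERIA = {
    "no_refusal": lambda resp: not is_refusal(resp),
    "brand_voice": lambda resp: not violates_brand_voice(resp),
}


def run_global_criteria(responses: list[str]) -> dict[str, float]:
    """Apply every global criterion to every response; return the pass rate per criterion."""
    if not responses:
        return {}
    return {
        name: sum(check(r) for r in responses) / len(responses)
        for name, check in GLOBAL_CRITERIA.items()
    }
```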

Define Per-Query Ground Truth Answers

To evaluate the response of an LLM application, you often need something to evaluate it against. For many use cases, defining a ground truth answer for each test case is essential. This is especially true for factual queries, or for specific advice over a known knowledge base, where ground truth lets you measure accuracy directly.
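In code, a per-query ground truth is just a field stored alongside each test input. A minimal sketch, assuming your application can be called as a plain function from query to response; `EvalCase`, `normalize`, and `per_query_accuracy` are hypothetical names, and normalized string equality is only the simplest possible comparison (the evaluators described next are richer alternatives).

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    query: str
    ground_truth: str  # the known-correct answer for this specific query


def normalize(text: str) -> str:
    """Lowercase, trim, drop a trailing period, and collapse whitespace."""
    return " ".join(text.lower().strip().rstrip(".").split())


def per_query_accuracy(cases: list[EvalCase], app: Callable[[str], str]) -> float:
    """Fraction of cases where the app's answer matches that case's own ground truth."""
    if not cases:
        return 0.0
    correct = sum(normalize(app(c.query)) == normalize(c.ground_truth) for c in cases)
    return correct / len(cases)
```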

What Kinds of Evaluators Should You Run, Specifically?

Once you have your criteria and ground truths, you’re ready to set up the evaluation. But how should you actually run it? Manually, with a reviewer? Programmatically? And if so, how? There's a wide spectrum of methods available, each offering different insights, benefits, and tradeoffs, which we’ll get into next.

Evaluating Responses: The Hierarchy of Methods

Evaluation methods form a hierarchy that generally trades quality for scalability. Looking at each type of evaluator in turn shows its advantages and disadvantages:

- Manual assessment by a reviewer: This involves human evaluators reviewing and assessing the responses generated by the LLM. Manual assessments can be invaluable for their ability to catch nuances, and to grade qualitative criteria and context that automated methods might miss. But they can also be extremely time-consuming, and scale poorly for large datasets.

- LLM evals: Using another LLM to evaluate the responses of your LLM application (often called “LLM-as-a-judge”) brings a different model's perspective. This method can surface inconsistencies or errors that human reviewers or specific automated tests might miss, while scaling significantly better than human assessment. It can also grade more qualitative criteria of a response. While this approach might inspire skepticism at first, it's akin to humans judging the work of other humans (think: performance reviews, exams), which is widely accepted, and research suggests that the approach works for LLMs, too.

- Statistical scorers: These scorers typically compute a statistical measure of overlap between the response and a target text. They are highly reliable (since they’re based on deterministic measures), but they can be less accurate, because they cannot reason about nuanced responses the way an LLM or human evaluator can. That said, they can still be used effectively for evaluating against golden responses, especially where a graded score is more useful than a binary pass / fail. Some examples, in increasing order of lenience:

  - Exact matching: whether the LLM response is identical to the golden response.

  - Substring match: whether the golden response (or key parts of it) appears within the LLM response.

  - Levenshtein distance: the minimum number of single-character edits (insertions, deletions, or substitutions) needed to transform the LLM response into the golden response.

  - Semantic similarity matching: how close the meanings of the golden response and the LLM response are, as measured by the inner product of their embedding vectors (cosine similarity, when the vectors are normalized) or another measure.

  - LLM semantic equivalence matching: like semantic similarity matching, except that an LLM makes the judgment call on whether the LLM-produced response and the golden response are sufficiently similar.
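The deterministic scorers in this list are short enough to sketch directly. These are minimal, dependency-free illustrations rather than any particular library's implementation; the embedding vectors passed to `cosine_similarity` are assumed to come from an embedding model of your choice, which is not shown. `levenshtein_similarity` is a hypothetical convenience that rescales edit distance into a 0 to 1 score.

```python
import math


def exact_match(response: str, golden: str) -> float:
    return float(response.strip() == golden.strip())


def substring_match(response: str, golden: str) -> float:
    """1.0 if the golden answer appears anywhere in the response (case-insensitive)."""
    return float(golden.strip().lower() in response.lower())


def levenshtein(a: str, b: str) -> int:
    """Minimum single-character insertions, deletions, or substitutions to turn a into b."""
    prev = list(range(len(b) + 1))  # distances from the empty prefix of a
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if characters match)
            ))
        prev = curr
    return prev[-1]


def levenshtein_similarity(response: str, golden: str) -> float:
    """Edit distance rescaled to [0, 1], where 1.0 means identical strings."""
    longest = max(len(response), len(golden)) or 1
    return 1 - levenshtein(response, golden) / longest


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Inner product of the two vectors after normalizing them to unit length."""
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return dot / norm if norm else 0.0
```

Note how lenience increases down the list: `exact_match("Paris.", "Paris")` fails on a single period, `substring_match` passes it, and the edit-distance and embedding scores degrade gradually instead of flipping between 0 and 1.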

Some Specific LLM Evals You Should Consider

Beyond the basics, there are some specific LLM eval metrics that provide significant value, and shouldn’t be overlooked:

- Faithfulness: This metric assesses whether every statement in the response is faithful to the source data, catching instances where the model might be “hallucinating”, or fabricating data and presenting it as true. This ensures the reliability of the information provided, which is crucial for maintaining user trust.

- Retrieval quality: For applications that retrieve information from a knowledge base or dataset, the quality of the retrieved context is key. This metric checks whether the retrieved context actually addresses the user's input, measuring the retrieval stage of the RAG system.
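Both metrics are commonly computed with an LLM judge. The sketch below keeps that judge as an injected, hypothetical callable so the scoring logic stays visible and testable: faithfulness is the fraction of response statements the source context supports, and retrieval quality is the fraction of retrieved chunks judged relevant to the query. The naive sentence split is an assumption; a production system might ask an LLM to extract atomic claims instead.

```python
import re
from typing import Callable

# Hypothetical judge: (claim_or_query, text) -> True if the text supports / addresses it.
# In practice this would wrap an LLM prompt; any callable with this shape works here.
Judge = Callable[[str, str], bool]


def split_statements(response: str) -> list[str]:
    """Naive split on sentence-ending punctuation followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", response) if s.strip()]


def faithfulness(response: str, context: str, judge: Judge) -> float:
    """Fraction of the response's statements that the source context supports."""
    statements = split_statements(response)
    if not statements:
        return 1.0  # nothing claimed, so nothing unfaithful
    return sum(judge(s, context) for s in statements) / len(statements)


def retrieval_relevance(query: str, chunks: list[str], judge: Judge) -> float:
    """Fraction of retrieved chunks that the judge considers relevant to the query."""
    if not chunks:
        return 0.0
    return sum(judge(query, chunk) for chunk in chunks) / len(chunks)
```

For a quick smoke test, a toy judge such as `lambda claim, text: claim.lower().rstrip(".") in text.lower()` exercises the plumbing, though it is far too strict for real responses.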

Key Considerations for Your Evaluation System

At this point, you have everything in place: you know the role of model vs. product evals, how to get the right test data, what kinds of evals to run, and the tradeoffs inherent in each. But when the proverbial rubber meets the road and you actually run evals end to end, a number of considerations come into play. We’ll quickly walk through the most important ones, along with how to address them.

Time Costs

As described above, some of your choices (e.g., human evaluators, LLM vs. statistical evals) can significantly impact the time cost of running your evaluation. However, the mechanics of how you implement those methods can have an enormous impact, too. Here are some tips:

- Aim for efficiency in execution: Running evaluation scripts and manually recording results can be both time-consuming and prone to error. Automating these processes as much as possible reduces the overhead and allows developers and evaluators to focus on interpreting the results rather than on the mechanics of obtaining them.

- Streamline workflows: Incorporating tools and platforms that facilitate automated data capture and analysis can significantly reduce the time costs associated with evaluations. This includes using software that integrates evaluation metrics directly into your development environment.
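As an illustration of automating execution and capture, here is a minimal, hypothetical eval runner: it calls the application on every case, applies every evaluator, records latency, and serializes the results as JSON Lines so runs can be stored and compared later. The names `run_eval` and `to_jsonl`, the record layout, and the `app` and evaluator signatures are all assumptions for this sketch.

```python
import json
import time
from typing import Callable


def run_eval(
    cases: list[dict],
    app: Callable[[str], str],  # hypothetical: your LLM application, query -> response
    evaluators: dict[str, Callable[[dict, str], float]],  # name -> (case, response) -> score
) -> list[dict]:
    """Run every evaluator on every case and return one record per case."""
    records = []
    for case in cases:
        start = time.perf_counter()
        response = app(case["query"])
        latency = time.perf_counter() - start
        scores = {name: fn(case, response) for name, fn in evaluators.items()}
        records.append({
            "query": case["query"],
            "response": response,
            "latency_s": round(latency, 4),
            "scores": scores,
        })
    return records


def to_jsonl(records: list[dict]) -> str:
    """Serialize records as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(r) + "\n" for r in records)
```

Writing the output with, for example, `Path("eval_results.jsonl").write_text(to_jsonl(records))` leaves a durable artifact per run, so nobody has to copy results into a spreadsheet by hand.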

Ad-hoc Testing vs. Systematic Assessment

As an organization rolls out LLM-enabled functionality, testing often starts off ad hoc, which makes sense at first! But as the application grows in maturity, users, and importance, ad-hoc approaches become tech debt that you should address urgently, for a few reasons:

- Achieving consistency in test cases: Relying on ad-hoc testing, where different test cases are used sporadically, can lead to inconsistent and unreliable assessment of your application’s performance over time. Establishing a broad and consistently used test set for evaluations ensures that you cover both success and failure scenarios effectively.

- Structure your evaluation frameworks: A structured approach to testing, with a predefined set of test cases and criteria, helps maintain a consistent quality benchmark across releases and iterations of your application.
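One payoff of a fixed, predefined test set is that two releases can be compared case by case. A minimal sketch, assuming each run is stored as a mapping from a stable test-case ID to a score; `compare_runs` and the `tolerance` parameter are hypothetical.

```python
def compare_runs(
    baseline: dict[str, float],
    candidate: dict[str, float],
    tolerance: float = 0.0,
) -> tuple[dict[str, tuple[float, float]], list[str]]:
    """Compare per-case scores from two releases evaluated on the same test set.

    Returns (regressions, missing): cases whose score dropped by more than `tolerance`,
    as {case_id: (baseline_score, candidate_score)}, plus case IDs present in only
    one run, which signals that the test set itself drifted between releases.
    """
    missing = sorted(set(baseline) ^ set(candidate))
    regressions = {
        cid: (baseline[cid], candidate[cid])
        for cid in sorted(set(baseline) & set(candidate))
        if candidate[cid] < baseline[cid] - tolerance
    }
    return regressions, missing
```

Reporting `missing` separately matters: with ad-hoc testing, a score change can reflect different test cases rather than a different application.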

Coverage and Continuous Monitoring

A healthy Continuous Integration/Continuous Deployment (CI/CD) workflow is one of the holy grails of modern DevOps, and for good reason. To ensure a continuously healthy LLM application, you’ll need to:

- Generate comprehensive test sets: Ensuring that your test set covers a wide range of scenarios, including edge cases, allows for a more thorough understanding of your application's capabilities and limitations. This comprehensive coverage is crucial for identifying areas for improvement and ensuring robust performance.

- Integrate with CI/CD: Incorporating evaluation processes into your CI/CD pipeline enables ongoing monitoring and regression detection, so you can promptly identify and address issues as they arise during development.

- Online monitoring: Running evaluators on production traffic enables real-time monitoring of your application’s performance. This approach allows for the assessment of how updates or changes affect user experience and application reliability in a live environment.
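Two small building blocks cover the CI/CD and online-monitoring points above. The sketch below is hypothetical: `ci_gate` lists the criteria whose pass rate fell below a chosen threshold (a CI step would fail the build, e.g. via `sys.exit(1)`, when the list is non-empty), and `should_sample` deterministically selects roughly `rate` of production requests for online evaluation by hashing the request ID, so the same request always gets the same decision. The thresholds and sampling rate are choices you would make, not recommended values.

```python
import hashlib


def ci_gate(pass_rates: dict[str, float], thresholds: dict[str, float]) -> list[str]:
    """Return the criteria whose pass rate is below its minimum (missing rates count as 0)."""
    return [
        name for name, minimum in thresholds.items()
        if pass_rates.get(name, 0.0) < minimum
    ]


def should_sample(request_id: str, rate: float) -> bool:
    """Deterministically select about `rate` (0.0 to 1.0) of requests for online evals."""
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # roughly uniform in [0, 1)
    return bucket < rate
```

Hashing instead of calling `random.random()` makes sampling reproducible: if a sampled conversation is flagged, you can tell exactly which requests were (and were not) evaluated.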

Use Analytics and Real User Feedback

Evaluations in development and pre-production environments are only half the battle: they help you feel confident that you’re shipping something meaningful to your users. But the only way to know that you are is with effective analytics and feedback from real users. Some best practices here:

- Combine analytics with evaluations: Integrating analytics with evaluations offers a complete picture of your application's performance. This combination provides insights into how users interact with your application and how those interactions align with the evaluation outcomes.

- Create strong feedback loops: Incorporating user feedback into the evaluation process ensures that the application not only meets technical benchmarks but also addresses the needs and expectations of users. User feedback can highlight issues that may not be evident through automated evaluations alone.

Balancing Automated and Manual Evaluations

Done correctly, a mix of manual and automated evaluations gives you the benefits of both. The challenge is deciding how to split the work between them, and which tools to enlist:

- Automated vs. manual: While automated evaluations provide scalability and consistency, manual assessments by reviewers are invaluable for their nuanced understanding of context and user intent. It’s worth using a mix of both, reserving manual evaluation for a small subset of difficult questions.

- Tooling and automation: Tools that facilitate both automated evaluation and manual review processes can greatly enhance the efficiency and effectiveness of your evaluations. This includes software that supports annotation, comparison, and analysis of evaluation results.
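One way to choose that small subset of difficult questions is to treat automated scores near the decision boundary as uncertain and send only the most uncertain ones to human reviewers. This is a hypothetical heuristic, not the only definition of "difficult": the `low`/`high` band and the `max_manual` budget are assumptions you would tune.

```python
def route_for_review(
    results: list[tuple[str, float]],  # (case_id, automated score in [0, 1])
    max_manual: int,
    low: float = 0.4,
    high: float = 0.7,
) -> tuple[list[str], list[str]]:
    """Split cases into (manual_review, automated_only).

    Cases scoring inside [low, high] are borderline; the ones closest to the middle
    of that band are the most uncertain and go to humans, up to `max_manual`.
    """
    midpoint = (low + high) / 2
    borderline = [(cid, s) for cid, s in results if low <= s <= high]
    borderline.sort(key=lambda item: abs(item[1] - midpoint))
    manual = [cid for cid, _ in borderline[:max_manual]]
    manual_set = set(manual)
    automated = [cid for cid, _ in results if cid not in manual_set]
    return manual, automated
```

Capping the queue with `max_manual` keeps reviewer workload predictable, while everything else, including confident failures, is still handled by the automated evaluators.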

Conclusion

Thanks for making it to the end! This was a long one, and we covered a lot of ground, from the role of model vs. product evals to the practical nuances of running LLM application evals in your organization. These evals are a critical part of understanding how your product performs before it goes out to users. More importantly, they let you measure the impact of the changes you make.

But delivering robust evals isn’t easy. It requires a broad and representative set of test inputs, effective global and per-query evaluation criteria, and a mix of automated and manual assessments. In implementation, it requires considerations for time efficiency, enabling systematic assessment, ensuring comprehensive coverage, integrating with CI/CD, enabling online monitoring, and leveraging analytics and feedback.

If this guide to LLM product evals resonated, then you'll know that adopting the right tools will supercharge your journey from running ad-hoc scripts to being an LLM evals hero. Here at Context.ai, we’re building the LLM product evals and analytics stack that enables builders to develop LLM applications that delight their users. You can visit context.ai or reach out at henry@context.ai to learn more.