Product Update | May 2024

Are you desperate to understand how people are using your LLM product, but don’t want to share your transcripts with a third party for analysis?

Good news! We now support self-hosted deployments for LLM user analytics. This allows you to run the Context.ai product within your own cloud instance without data leaving your control. Get in touch to learn more!

This month we’ve also made transcript categorisation significantly more accurate, and added projects, which let you separate multiple LLM products within one team or company.

Self-hosted deployment

Self-hosted deployment has long been requested by the most privacy- and security-conscious enterprises, and we now support it.

Deployment is available via Docker image for our first beta group of customers. Please get in touch if you’d like to be included in the next wave!

Improved transcript categorisation

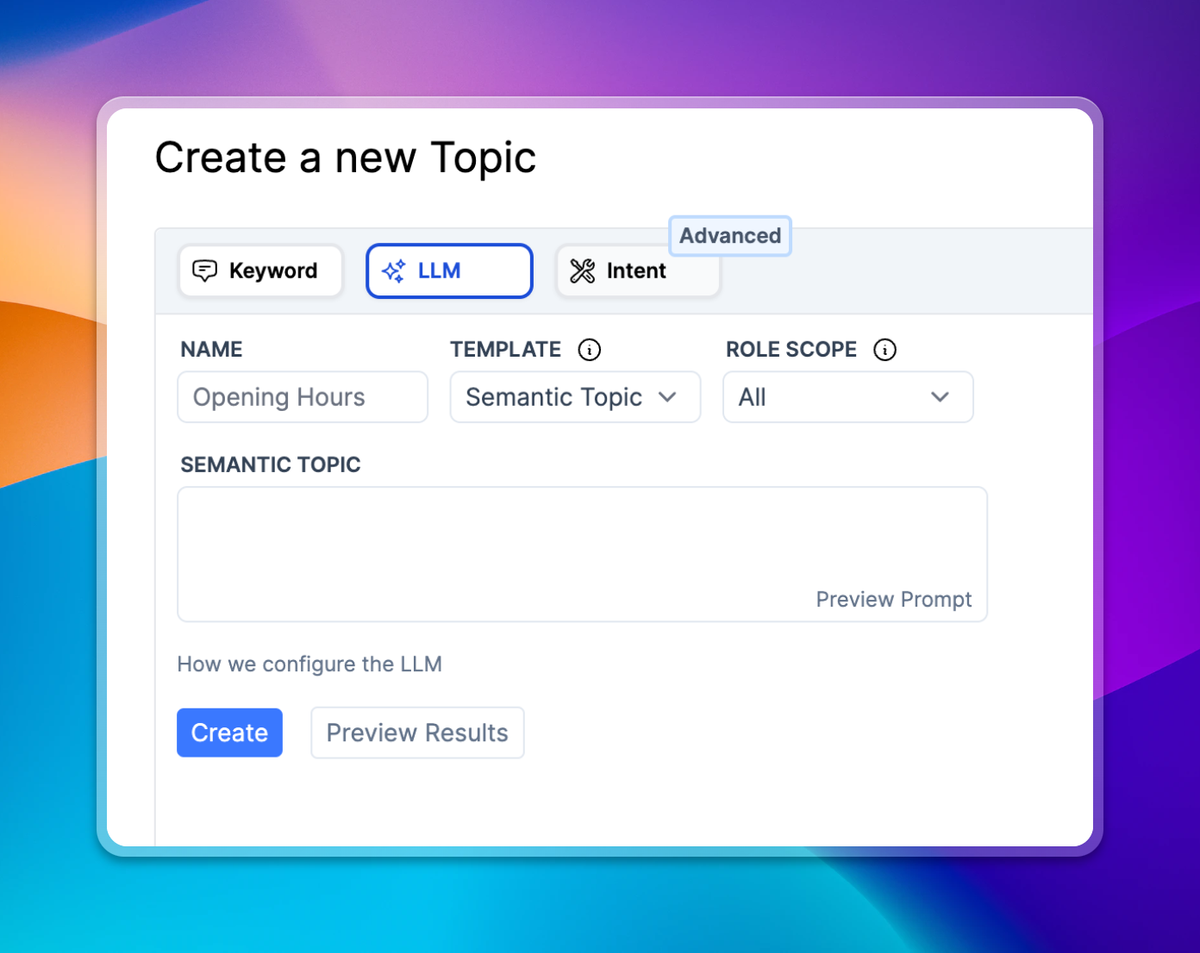

This month we’ve rebuilt transcript categorisation (previously known as topics) to be significantly more reliable and flexible.

We still support intent and semantic matching, but the new architecture also lets you run any LLM request over your messages to assign them to categories. Templates are available to make it easy to get started.
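To make the idea of running an LLM request over a message concrete, here is a minimal sketch of prompt-based categorisation. It is an illustration only, not the Context.ai implementation: the `TEMPLATE` text, the `categorise` function, and the `fake_llm` stand-in are all hypothetical names, and in practice you would replace `fake_llm` with a call to your own model.

```python
from typing import Callable, Sequence

# Hypothetical prompt template, written for illustration only.
TEMPLATE = (
    "Assign the user message to exactly one of these categories: {categories}.\n"
    "Reply with the category name only.\n\n"
    "Message: {message}"
)


def categorise(
    message: str,
    categories: Sequence[str],
    llm: Callable[[str], str],
    fallback: str = "uncategorised",
) -> str:
    """Ask an LLM to pick one category; fall back if the reply is not a known category."""
    prompt = TEMPLATE.format(categories=", ".join(categories), message=message)
    reply = llm(prompt).strip().lower()
    for category in categories:
        if category.lower() == reply:
            return category
    return fallback


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call so the sketch runs offline."""
    message = prompt.rsplit("Message: ", 1)[1].lower()
    return "billing" if "refund" in message else "other"


print(categorise("I want a refund", ["Billing", "Support"], fake_llm))
```

Checking the reply against the known category list, rather than trusting it directly, keeps an unexpected model answer from creating a stray category.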

Projects

Larger companies often have many LLM products, and these can now be split apart using our new projects feature.

Select new projects in the upper left corner of the application and generate an API key for each. Then send the transcripts for each LLM product using that project’s API key, so that data from each product is kept separate.
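As a rough sketch of keeping one API key per project on the client side, the snippet below picks the key by product name before building an upload request. Everything specific here is an assumption made for illustration: the `INGEST_URL` placeholder is not a real Context.ai endpoint, the bearer-token header is a guess at the auth scheme, and `build_request` is a hypothetical helper. Check the API documentation for the actual endpoint and request format.

```python
import json
import urllib.request

# Placeholder endpoint for illustration; NOT a real Context.ai URL.
INGEST_URL = "https://example.invalid/transcripts"


def build_request(project_keys: dict, product: str, transcript: dict) -> urllib.request.Request:
    """Build (but do not send) an upload request using the key for one product's project."""
    api_key = project_keys[product]  # raises KeyError if the product has no project key
    body = json.dumps(transcript).encode("utf-8")
    return urllib.request.Request(
        INGEST_URL,
        data=body,
        headers={
            # Bearer auth is an assumption, not confirmed by the source.
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Hypothetical product names and keys; load real keys from a secret store.
keys = {"support-bot": "key-a", "sales-bot": "key-b"}
request = build_request(keys, "sales-bot", {"messages": []})
print(request.get_method(), request.get_header("Authorization"))
```

Looking the key up by product name in a single place makes it harder to send one product's transcripts with another project's key.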