How ThoughtSpot uses product analytics to build great LLM products - an interview with ThoughtSpot VP Eng Jasmeet Jaggi

An overview of how the team at ThoughtSpot uses product analytics to guide development. Jasmeet Jaggi, VP Engineering at ThoughtSpot, leads a team building ThoughtSpot Sage, an analytics product driven by natural language search.

Today we’re sharing an overview of how ThoughtSpot builds products powered by large language models (LLMs), and how the team uses product analytics to guide development. This blog post is the result of an interview with Jasmeet Jaggi, VP Engineering at ThoughtSpot, who leads a team building ThoughtSpot Sage, an analytics product driven by natural language search.

What products is ThoughtSpot building using LLMs?

ThoughtSpot provides businesses with natural language search over their business data. Users can ask ThoughtSpot questions like “What were my top performing products in the west region last year?” and “How are my sales growing in the east for jackets?”

ThoughtSpot uses LLMs to understand natural language questions, with a full technical overview available in the ThoughtSpot Sage docs.

ThoughtSpot also uses LLMs for other problems, such as suggesting questions for users to explore and assisting users with data modeling.

How does ThoughtSpot understand user behavior and measure product performance in LLM products?

Iteration is key to building any good product. ThoughtSpot measures adoption and usage of features and analyzes in-product workflows to get high-value signals of user behavior. For example, the team analyzes click-through rates (CTRs) on search results and treats saving, pinning, or sharing a result as a high-value action.
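As a rough illustration of the signals described above, the sketch below computes a CTR and a high-value action rate per search. The event names (`results_shown`, `click`, `save`, `pin`, `share`) and the tuple layout are assumptions made for this example, not ThoughtSpot's actual event schema.

```python
# Hypothetical event names; the interview does not list the real ones.
HIGH_VALUE_ACTIONS = {"save", "pin", "share"}


def search_metrics(events):
    """Compute per-search CTR and high-value action rate.

    `events` is an iterable of (search_id, event_type) pairs.
    A search counts at most once toward each metric.
    """
    shown, clicked, high_value = set(), set(), set()
    for search_id, event_type in events:
        if event_type == "results_shown":
            shown.add(search_id)
        elif event_type == "click":
            clicked.add(search_id)
        elif event_type in HIGH_VALUE_ACTIONS:
            high_value.add(search_id)

    total = len(shown)
    if total == 0:
        return {"ctr": 0.0, "high_value_rate": 0.0}
    return {
        # Only count clicks and actions on searches that showed results.
        "ctr": len(clicked & shown) / total,
        "high_value_rate": len(high_value & shown) / total,
    }


events = [
    ("s1", "results_shown"), ("s1", "click"), ("s1", "pin"),
    ("s2", "results_shown"),
    ("s3", "results_shown"), ("s3", "click"),
    ("s4", "results_shown"), ("s4", "click"), ("s4", "share"),
]
metrics = search_metrics(events)
print(metrics)
```

Counting each search once keeps a single enthusiastic user from inflating the rates; a production pipeline would likely also segment by feature, user cohort, and time window.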

In the case of Search, building trust with users is key. ThoughtSpot shows users how it interpreted each question in an easy-to-consume token form, so users can see, understand, and modify the interpretation. Users also provide explicit positive or negative feedback to the system, which is used to improve the quality of search in future iterations. All of these actions are measured and optimized using the product analytics tools outlined below.


What tools are in ThoughtSpot’s product analytics tech stack?

All backend systems at ThoughtSpot send metrics to Grafana, which is used to monitor the RED (Rate, Errors, and Duration) metrics at the service level.
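To make the service-level metrics concrete, here is a minimal sketch that derives a request rate, an error ratio, and a 95th-percentile latency from raw request records. The record layout, the choice of 5xx as "error", and the nearest-rank percentile are assumptions for illustration; in practice these values would typically be computed by the metrics backend rather than in application code.

```python
def red_metrics(requests, window_seconds):
    """Summarize one service over one time window.

    `requests` is a list of (status_code, duration_ms) tuples
    observed during `window_seconds`.
    """
    count = len(requests)
    if count == 0:
        return {"rate_per_s": 0.0, "error_ratio": 0.0, "p95_ms": 0.0}

    # Assumption: only server-side failures (5xx) count as errors.
    errors = sum(1 for status, _ in requests if status >= 500)

    durations = sorted(duration for _, duration in requests)
    # Nearest-rank p95 using integer arithmetic: ceil(0.95 * count).
    rank = (95 * count + 99) // 100
    p95 = durations[rank - 1]

    return {
        "rate_per_s": count / window_seconds,
        "error_ratio": errors / count,
        "p95_ms": p95,
    }


# 20 requests in a 10-second window: 18 succeed, 2 fail,
# with latencies of 10 ms, 20 ms, ..., 200 ms.
sample = [(200, 10 * i) for i in range(1, 19)] + [(500, 190), (500, 200)]
summary = red_metrics(sample, window_seconds=10)
print(summary)
```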

ThoughtSpot sends a collection of clickstream and custom events to Mixpanel.

ThoughtSpot uses Fullstory to capture user workflows and observe how users navigate the product. These recordings are all masked so that no sensitive customer information is sent out.

All product analytics data is fed into ThoughtSpot’s own dogfood environments, where product teams analyze the data using the ThoughtSpot product. The team has found that using the ThoughtSpot product is a big enabler for tracking hard-to-answer questions.

What challenges has ThoughtSpot encountered building with LLMs?

LLMs are powerful at understanding natural language, but for analytics ThoughtSpot combines them with the AI tools it previously built to deliver an even better product. For example, ThoughtSpot built a semantic model and a query generation system that handle advanced schemas, including those prone to chasm traps and fan traps, and that generate SQL in the correct dialect for the target data system. Analytics data schemas can be complex. With ThoughtSpot, users can generate SQL that spans multiple tables with complex joins, an area where LLMs struggle.

To build DSL search, ThoughtSpot built systems like usage-based ranking, which finds the right set of co-occurring columns and identifies which columns certain users use most often. The team also built an index of the data values that are often referred to in natural language questions. Together, these systems disambiguate user intent with better accuracy and latency.
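One way to picture usage-based ranking is as a scoring function over a query log. The sketch below is a simplified illustration under stated assumptions, not ThoughtSpot's implementation: the log format, the column names, and the additive score (co-occurrence count plus per-user usage count) are all hypothetical.

```python
from collections import Counter
from itertools import combinations


def build_usage_stats(query_log):
    """Count column co-occurrence and per-user column usage.

    `query_log` is an iterable of (user, [columns used in one query]).
    """
    cooccurrence = Counter()  # sorted (col_a, col_b) pair -> count
    per_user = Counter()      # (user, column) -> count
    for user, columns in query_log:
        unique = sorted(set(columns))
        for col in unique:
            per_user[(user, col)] += 1
        for pair in combinations(unique, 2):
            cooccurrence[pair] += 1
    return cooccurrence, per_user


def rank_candidates(candidates, context_columns, user, cooccurrence, per_user):
    """Order ambiguous candidate columns by how well usage supports them."""
    def score(col):
        co = sum(cooccurrence[tuple(sorted((col, ctx)))] for ctx in context_columns)
        return co + per_user[(user, col)]
    # Highest score first; ties broken alphabetically for determinism.
    return sorted(candidates, key=lambda c: (-score(c), c))


query_log = [
    ("ana", ["revenue", "region", "order_date"]),
    ("ana", ["revenue", "region"]),
    ("bo", ["list_price", "product"]),
    ("bo", ["revenue", "product"]),
]
cooccurrence, per_user = build_usage_stats(query_log)

# "sales by region" asked by ana: does "sales" mean revenue or list_price?
ranked = rank_candidates(["list_price", "revenue"], ["region"], "ana",
                         cooccurrence, per_user)
print(ranked)
```

Here `revenue` wins because it has appeared alongside `region` before and because this user has queried it before. A real system would presumably weight, decay, and normalize these signals rather than simply adding raw counts.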

Another case where DSL search comes in handy is when a user asks “what was my revenue last year?” An LLM may filter the data to an arbitrary year, or to the last year of its training data. ThoughtSpot found that the keywords it had built for date handling perform much better than direct natural language to SQL; for example, “last year” is natively supported in the ThoughtSpot language.
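The advantage of a date keyword is that it resolves deterministically against the current date instead of being guessed by a model. The sketch below illustrates the idea with a hypothetical resolver. Only “last year” comes from the interview; the other keywords, the function name, and the use of calendar (not fiscal) years are assumptions.

```python
from datetime import date, timedelta


def resolve_date_keyword(keyword, today):
    """Return an inclusive (start, end) date range for a relative keyword."""
    if keyword == "last year":
        year = today.year - 1
        return date(year, 1, 1), date(year, 12, 31)
    if keyword == "this year":
        return date(today.year, 1, 1), today
    if keyword == "last month":
        # Step back one day from the first of this month to land
        # on the last day of the previous month.
        last_of_prev = today.replace(day=1) - timedelta(days=1)
        return last_of_prev.replace(day=1), last_of_prev
    raise ValueError(f"unsupported date keyword: {keyword!r}")


today = date(2024, 3, 15)
last_year = resolve_date_keyword("last year", today)
last_month = resolve_date_keyword("last month", today)
print(last_year)   # (datetime.date(2023, 1, 1), datetime.date(2023, 12, 31))
print(last_month)  # (datetime.date(2024, 2, 1), datetime.date(2024, 2, 29))
```

Because the range is computed from `today`, the same question asked a year later yields a different and still correct filter, which is exactly what a model anchored to its training data cannot guarantee.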

When users refer to business vocabulary that LLMs may not be aware of, ThoughtSpot augments that with business synonyms that can be configured ahead of time and that include company-specific vocabulary. There are also training loops in the product which improve the system’s vocabulary.
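As a simple picture of how preconfigured synonyms might be applied, the sketch below rewrites company-specific terms into canonical terms before the question reaches any downstream model. The synonym table and the `apply_synonyms` helper are hypothetical and only illustrate the general idea, not how ThoughtSpot stores or applies synonyms.

```python
import re

# Hypothetical, company-specific vocabulary configured ahead of time.
# Keys must be lowercase.
SYNONYMS = {
    "arr": "annual recurring revenue",
    "logos": "customers",
    "gmv": "gross merchandise value",
}


def apply_synonyms(question, synonyms):
    """Replace whole-word business terms with their canonical form."""
    if not synonyms:
        return question
    # Longest terms first so multi-word synonyms are not split by shorter ones.
    terms = sorted(synonyms, key=len, reverse=True)
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(t) for t in terms) + r")\b",
        re.IGNORECASE,
    )
    return pattern.sub(lambda m: synonyms[m.group(0).lower()], question)


rewritten = apply_synonyms("What was ARR by region for new logos?", SYNONYMS)
print(rewritten)
```

The word-boundary match keeps the rewrite from touching longer words that merely contain a term.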

There are many more problems in the data analytics space where solutions ThoughtSpot built over the last decade have come in handy, allowing the team to use LLMs to give users a great experience with reliability and trust.

What lessons and tips has ThoughtSpot learned on the way?

Find the right tools to solve the right problems.

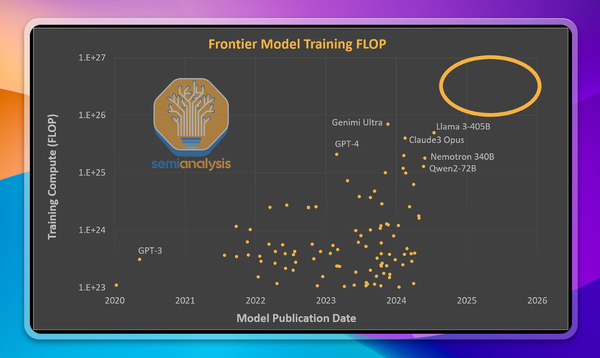

ThoughtSpot went through a journey: starting with simple prompts, then prompt engineering, then few-shot examples, then semantic search systems to improve correctness and reliability, and finally its own fine-tuned models for specific tasks.

Each of these options requires a different level of engineering investment, and ThoughtSpot used data and product analytics to guide its choices and engineering investment decisions along the way.

Big thanks to Jasmeet Jaggi at ThoughtSpot for collaborating on this piece. Learn more and get started with ThoughtSpot here. Learn more and get started with Context here.