The Product Side of Prompt Engineering

Prompt engineering isn’t just for engineers. In this piece, we’ll apply a product lens to prompt engineering. We’ll review fundamental prompting techniques and the importance of iterating on prompts according to user feedback.

It’s easy to convince yourself you’re doing prompt engineering just by implementing some fancy new technique. But to actually add rigor to that process, you have to treat prompt engineering as a full-fledged methodology, not simply a laundry list of strategies.

It’s an iterative cycle of understanding user needs, refining prompts to address those needs, and running tests to validate improvements. If that sounds like it overlaps with the scope of a product manager or data scientist, that’s because it does. Prompt engineering is everyone’s responsibility at an AI company—not just software engineers’.

In this post, we’ll introduce the fundamentals of prompt engineering with a product-focused lens. Instead of doing an AI literature review that will inevitably become outdated, we’ll emphasize first principles and the overarching themes that will serve you even as the field advances.

Remind yourself that generative AI isn’t magic

One of the cardinal sins of working with engineers is having outlandish ideas of what technology is capable of. LLMs aren’t magical oracles and no, they can’t cook dinner for you (at least not yet).

To effectively engineer prompts, it’s helpful to have at least a high-level understanding of how LLMs work. We’ll use what’s become a popular, but still apt, analogy: LLMs are a highly sophisticated autocomplete.

They are generative AI systems that have been trained on vast amounts of written text (think Wikipedia, Reddit, Stack Overflow, etc.). The models use that information to predict the next word in a sequence with astonishing accuracy (and a dash of randomness). And it’s not just the single next word: by repeatedly predicting what comes next, LLMs can generate a whole series of words to follow some input text.
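To make the “autocomplete with a dash of randomness” idea concrete, here is a toy sketch of how a single next-word choice could be made. It assumes the model has already assigned a raw score to each candidate word. The scores below are made up for illustration, and real LLMs score subword tokens across a vocabulary of many thousands, not three words. A temperature parameter controls how much randomness enters the choice.

```python
import math
import random
from typing import Optional


def softmax(scores: dict[str, float], temperature: float = 1.0) -> dict[str, float]:
    """Convert raw scores into probabilities. Lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {word: s / temperature for word, s in scores.items()}
    top = max(scaled.values())
    # Subtract the max before exponentiating to avoid overflow.
    exps = {word: math.exp(s - top) for word, s in scaled.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


def sample_next_word(scores: dict[str, float], temperature: float = 1.0,
                     rng: Optional[random.Random] = None) -> str:
    """Pick one word at random, weighted by its probability."""
    if rng is None:
        rng = random.Random()
    probs = softmax(scores, temperature)
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]


# Hypothetical scores for the word following "Now, this is the story all about how"
scores = {"my": 5.0, "the": 2.0, "a": 1.0}
print(softmax(scores))  # "my" gets most of the probability mass
print(sample_next_word(scores, temperature=0.7, rng=random.Random(0)))
```

Generating longer text repeats this step, appending each chosen word to the input before predicting the next one. A temperature close to zero makes the choice nearly deterministic.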

ChatGPT has developed a reputation for being an all-encompassing virtual assistant. But ultimately, LLMs aren’t innately knowledgeable—they’re just very good at producing sequences of words based on patterns from the body of human knowledge. We’ll come back to this shortly when we discuss prompt engineering best practices.

The anatomy of a prompt

Let’s go over the basics of prompting and touch on the most common techniques that are the building blocks for more complex prompt engineering.

Officially, there are virtually no requirements for how you prompt an LLM. Whatever you provide, the LLM will do its best to continue the text per relevant patterns from its training data. Take the following example we tested in OpenAI’s playground:

User: Now, this is the story all about how

Assistant: My life got flipped turned upside down

AI coming up with Fresh Prince lyrics is a neat party trick, but such unstructured LLM prompts leave a lot to chance.

Production LLM applications have to be more reliable than that. Good prompts include some, if not all, of the following:

- A clear and direct instruction or question

- The relevant context to perform the task or answer the question

- Examples that demonstrate how to reason about the problem

- Explicit directions for how to format the output

A clear directive (and if applicable, the relevant context) is the bare minimum for prompting an LLM. On its own, that would be called zero-shot prompting. Since LLMs are trained on so much information, zero-shot prompting is often an effective starting point for simpler tasks:

User: Translate the following from English to Latin American Spanish

English: The car is red

Spanish:

Assistant: El carro es rojo

Not too shabby. The model even used “carro,” the usual Latin American word for car, rather than “coche,” the word more common in Spain. Notice just how specific and carefully formatted the prompt is. Zero-shot prompting doesn’t mean zero-effort prompting: you still need to be thoughtful about how you ask a question or present a task to get the best results.
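The four ingredients of a good prompt listed earlier can be assembled mechanically. The sketch below is one possible layout, not a standard: the `Prompt` class, its field names, and the “Context:” and “Desired format:” labels are illustrative assumptions. Leaving out examples, context, and format directions reproduces a zero-shot prompt like the translation example above.

```python
from dataclasses import dataclass, field


@dataclass
class Prompt:
    instruction: str  # a clear and direct instruction or question
    context: str = ""  # relevant context for the task (optional)
    examples: list[tuple[str, str]] = field(default_factory=list)  # (input, output) demonstrations
    output_format: str = ""  # explicit directions for the output format (optional)

    def render(self, query: str) -> str:
        """Join the non-empty parts, in order, separated by blank lines."""
        parts = [self.instruction]
        if self.context:
            parts.append(f"Context: {self.context}")
        for example_input, example_output in self.examples:
            parts.append(f"{example_input}\n{example_output}")
        if self.output_format:
            parts.append(f"Desired format: {self.output_format}")
        parts.append(query)
        return "\n\n".join(parts)


# Zero-shot: an instruction and the input, nothing else
translate = Prompt(instruction="Translate the following from English to Latin American Spanish")
print(translate.render("English: The car is red\nSpanish:"))
```

Adding entries to `examples` turns the same template into a few-shot prompt without touching the rest of the code.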

For more complex tasks, it’s helpful to give the model examples in what’s called few-shot prompting. This is a form of in-context learning: rather than retraining the model, you include worked examples in the prompt itself, just before asking it to attempt a specialized task. For example, say you’re using an LLM to bucket your app’s users into personas:

User: Classify the following users as Super Fans, Average Adopters, or Potential Churn

User 1: Active 28 days out of the last 30. Average session length of 20 mins. Rated app 5 out of 5. // Super Fan

User 2: Active 24 days out of the last 30. Average session length of 7 mins. Rated app 4 out of 5. // Average Adopter

User 3: Active 6 days out of the last 30. Average session length of 3 mins. Did not rate app. // Potential Churn

User 4: Active 2 days out of the last 30. Average session length of 5 mins. Rated app 3 out of 5. //

Assistant: Potential Churn

The LLM was able to match the fourth user’s information against the previous three examples to make an accurate classification. Without those few-shot examples, the LLM would struggle, especially if your personas’ names were not self-explanatory.
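Because the examples are just data, a few-shot prompt like this one can be generated from a list of labeled users, and the model’s completion can be checked against the allowed labels before it reaches other systems. A minimal sketch; the function names are hypothetical and the model call itself is left out.

```python
from typing import Optional

LABELS = ["Super Fan", "Average Adopter", "Potential Churn"]

# Labeled demonstrations, copied from the example above
EXAMPLES = [
    ("Active 28 days out of the last 30. Average session length of 20 mins. Rated app 5 out of 5.", "Super Fan"),
    ("Active 24 days out of the last 30. Average session length of 7 mins. Rated app 4 out of 5.", "Average Adopter"),
    ("Active 6 days out of the last 30. Average session length of 3 mins. Did not rate app.", "Potential Churn"),
]


def build_few_shot_prompt(new_user: str) -> str:
    lines = ["Classify the following users as Super Fans, Average Adopters, or Potential Churn"]
    for i, (description, label) in enumerate(EXAMPLES, start=1):
        lines.append(f"User {i}: {description} // {label}")
    # End with a bare "//" so the most natural continuation is a label.
    lines.append(f"User {len(EXAMPLES) + 1}: {new_user} //")
    return "\n\n".join(lines)


def parse_label(completion: str) -> Optional[str]:
    """Map the model's completion onto a known label; None means it went off-script."""
    text = completion.strip().lower()
    for label in LABELS:
        if text.startswith(label.lower()):
            return label
    return None


prompt = build_few_shot_prompt(
    "Active 2 days out of the last 30. Average session length of 5 mins. Rated app 3 out of 5."
)
```

Returning `None` for an unrecognized completion lets calling code retry or route the case for review instead of passing a malformed label downstream.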

The previous example illustrates another point about interacting with LLMs: how to get your output in the desired format. Few-shot prompting can implicitly show an LLM how to structure its response such that it's convenient to pass along to other software systems.

Alternatively, you can include explicit directions. This could be as simple as asking for a standard format, such as JSON, HTML, or even a haiku. Better yet, you could provide a template and ask the model to conform to that schema:

User: What are the five largest countries by land area?

Desired format: <rank>. <country name> (<two-letter abbreviation>)

Assistant: 1. Russia (RU)

2. Canada (CA)

3. China (CN)

4. United States (US)

5. Brazil (BR)
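A template like this also makes the response machine-checkable. The sketch below, an illustrative assumption rather than part of any library, parses each line against `<rank>. <country name> (<two-letter abbreviation>)` and rejects anything that drifts from it, so a malformed answer can be caught (and, for example, retried) before it reaches downstream software.

```python
import re

# One line of "<rank>. <country name> (<two-letter abbreviation>)"
LINE_PATTERN = re.compile(r"^(\d+)\.\s+(.+?)\s+\(([A-Z]{2})\)$")


def parse_ranked_list(response: str) -> list[tuple[int, str, str]]:
    rows = []
    for raw_line in response.splitlines():
        line = raw_line.strip()
        if not line:
            continue  # tolerate blank lines between items
        match = LINE_PATTERN.match(line)
        if match is None:
            raise ValueError(f"line does not match the template: {line!r}")
        rank, name, code = match.groups()
        rows.append((int(rank), name, code))
    # Ranks should run 1, 2, 3, ... with no gaps or repeats.
    if [r[0] for r in rows] != list(range(1, len(rows) + 1)):
        raise ValueError("ranks are not consecutive starting at 1")
    return rows


response = "1. Russia (RU)\n\n2. Canada (CA)\n\n3. China (CN)\n\n4. United States (US)\n\n5. Brazil (BR)"
print(parse_ranked_list(response))
```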

You can achieve a lot with LLMs just by applying these fundamentals. Prompting can definitely get more complicated (and convoluted), but a strong grasp of these foundational concepts is table stakes for deploying LLMs in production.

Now that we’re on the same page about what makes up a prompt, how do you actually engineer your prompts to make your product better?

The prompt engineering cycle

Prompt engineering is a means to an end. The end goal? A better LLM product experience for users. Keep this in mind as we go through the prompt engineering cycle.

Articulate the user need

Often, the challenge lies in articulating what a “better experience” actually is. Answering that product question is a prerequisite for the rest of the prompt engineering work that follows.

There’s no way we can answer that question for you without additional context. That said, common dimensions along which you might want to improve your LLM product include:

- Accuracy: Is your model hallucinating and making false claims?

- Answer quality: Do answers capture the full nuance of a question and include the right level of detail?

- Tone/style: Are the length, word choice, and organization of your model’s responses appropriate?

- Bias/toxicity: Is the model at risk of misbehaving in a way that could misinform, offend, or even harm users?

- Privacy/security: Does the model follow company policy around PII? And is the model robust to vulnerabilities like prompt injection attacks?

- Latency: While latency is typically not a focus area for prompt engineering, some techniques have been proposed for giving users faster responses.

In addition to all of these, think about the top-level metrics and user journeys you’re optimizing for. For an online store this might be revenue, while an information retrieval app might prioritize daily active users. At the end of the day, prompt engineering is in pursuit of these overall business goals.

You may have a hunch about which objectives need the most attention, but operating on gut feel alone is a gamble. Instead, let user feedback and data be your guide. For LLM applications, you should monitor both what your users say to your models and what your models say back. By tracking all of the dimensions listed above, you’ll be able to confidently commit prompt engineering resources to the right efforts.

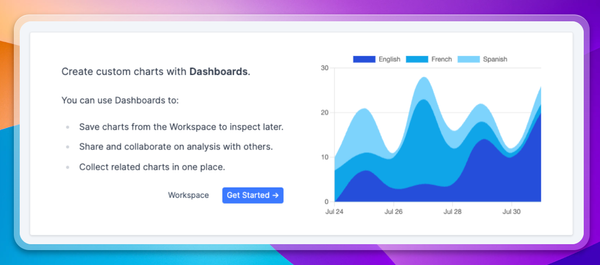

It’s not good enough to look only at aggregate metrics. Users interact with your AI models in different ways, and averages obscure that variation. Ideally, you’d inspect transcripts of user-AI interactions to get an authentic sense of where there’s room for improvement. You won’t have time to read every transcript at scale, so the middle ground is to look at slices of your overall dashboards. It might be that your product is consistently failing for specific use cases or discussion topics.

A typical workflow might involve starting with your aggregate dashboards, then zooming in on slices of interest, and finally reviewing individual transcripts from those slices. Your goal is to identify recurring errors in your LLM’s outputs that you might be able to correct through prompt iteration.
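The aggregate-to-slice-to-transcript workflow can be sketched in a few lines. Everything here is hypothetical: the transcript records, the `topic` field used for slicing, and the thumbs-up flag standing in for whatever quality signal your product actually collects.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical logged interactions; real records would include the full transcript text.
transcripts = [
    {"id": 1, "topic": "billing", "thumbs_up": False},
    {"id": 2, "topic": "billing", "thumbs_up": False},
    {"id": 3, "topic": "billing", "thumbs_up": True},
    {"id": 4, "topic": "shipping", "thumbs_up": True},
    {"id": 5, "topic": "shipping", "thumbs_up": True},
    {"id": 6, "topic": "returns", "thumbs_up": True},
    {"id": 7, "topic": "returns", "thumbs_up": False},
]


def satisfaction(records):
    """Share of interactions the user rated positively."""
    return mean([1.0 if r["thumbs_up"] else 0.0 for r in records])


def by_slice(records, key):
    """Step 2: the same metric, broken out per slice."""
    groups = defaultdict(list)
    for r in records:
        groups[r[key]].append(r)
    return {value: satisfaction(group) for value, group in groups.items()}


def worst_slice_transcripts(records, key, limit=5):
    """Step 3: find the weakest slice and pull transcripts from it for manual review."""
    scores = by_slice(records, key)
    worst = min(scores, key=scores.get)
    return worst, [r for r in records if r[key] == worst][:limit]


print(satisfaction(transcripts))  # Step 1: the aggregate
worst, to_review = worst_slice_transcripts(transcripts, "topic")
print(worst, [r["id"] for r in to_review])
```

In this toy data the aggregate (about 57% positive) hides that billing questions fare much worse than the other topics, which is exactly the kind of slice worth reading transcripts from.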

Refine your prompts to match the user need

So you’ve pinpointed the core issue and hopefully you have a vision for what success looks like. Now, you can focus on tweaking your prompt to meet that bar.

Start with the basics and make sure you’re not making any obvious mistakes, like failing to request model outputs in the right format. When it comes to giving specific instructions, use your knowledge of the product and use case to spell out exactly what you want the model to do. For instance, if you’re building a weather app for vacation planning, don’t just vaguely ask an LLM for a destination’s climate. Be precise about asking for precipitation, hours of daylight, or whatever else you intend to surface to your end user.

Beyond that, consider updating underperforming prompts from zero-shot to few-shot. This is where a product perspective can be especially important. Few-shot examples are your chance to tell the model exactly how it should behave.

The quality of the examples you choose is critical. Instead of inventing fake scenarios, browse your user data for actual user inputs and write out how you would have wanted the AI to respond. By supplying examples that used to stump the LLM, you can be confident that you’re providing valuable demonstrations that the model can learn from in context.
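One way to operationalize this is to log interactions with a quality signal, have someone write the response they wish the model had given, and draw few-shot examples from those corrected failures. The `Interaction` record and its fields are hypothetical; how failures get flagged and corrected depends on your product.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Interaction:
    user_input: str
    model_output: str
    flagged_bad: bool  # hypothetical quality signal, e.g. a thumbs-down or a reviewer flag
    corrected_output: Optional[str] = None  # the response a reviewer wishes the model had given


def few_shot_from_failures(log: list[Interaction], max_examples: int = 3) -> list[tuple[str, str]]:
    """Turn flagged, human-corrected interactions into (input, ideal output) examples."""
    examples = []
    for item in log:
        if item.flagged_bad and item.corrected_output:
            examples.append((item.user_input, item.corrected_output))
            if len(examples) == max_examples:
                break
    return examples


log = [
    Interaction("Weather in Lisbon in May?", "It's nice.", flagged_bad=False),
    Interaction("Rain in Bali in January?", "It's tropical.", flagged_bad=True,
                corrected_output="January falls in Bali's rainy season, so expect frequent showers."),
    Interaction("Daylight in Oslo in June?", "Long days.", flagged_bad=True),  # flagged, not yet corrected
    Interaction("Is Cairo hot in August?", "Yes.", flagged_bad=True,
                corrected_output="Yes. August is one of Cairo's hottest months, with almost no rain."),
]
print(few_shot_from_failures(log))
```

Interactions that were flagged but never corrected are skipped, since an example without an ideal response gives the model nothing to imitate.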

It’s worth introducing another common prompting technique: chain-of-thought (CoT) prompting. The idea is to ask an LLM to not just produce an answer but to explain its reasoning in arriving at that answer.

User: Alice was double Bob's age five years ago. Alice is 31 now. How old is Bob? Do not show any work.

Assistant: 26

User: Alice was double Bob's age five years ago. Alice is 31 now. How old is Bob? Show your work.

Assistant: Let's start by finding out how old Alice was five years ago. We can do this by subtracting 5 from her current age: 31 - 5 = 26.

According to the information given, Alice was double Bob's age five years ago. So, let's find out how old Bob was five years ago. We can do this by dividing Alice's age five years ago by 2: 26/2 = 13.

Now, we need to find out how old Bob is now. Since five years have passed, we can add 5 to Bob's age five years ago: 13 + 5 = 18.

Therefore, Bob is 18 years old.
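Checking the arithmetic by hand confirms the step-by-step answer. Writing b for Bob’s current age, and using Alice’s age five years ago (31 - 5 = 26):

```latex
\begin{aligned}
2\,(b - 5) &= 31 - 5 && \text{(Alice was double Bob's age five years ago)} \\
2b - 10 &= 26 \\
b &= 18
\end{aligned}
```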

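Without showing its work, the model answered 26, which is actually Alice’s age five years ago, not Bob’s age. Asked to show its work, it reasoned its way to the correct answer of 18. For use cases like automated AI agents, where the LLM may perform many actions on its own given a single goal from the user, CoT helps a model stay on track when it otherwise might go off the rails.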

Chain-of-thought prompting pairs particularly well with few-shot prompting. For complex reasoning tasks, don’t just give sample questions and answers. Show the LLM the steps in between and it can do a better job extrapolating to new queries.
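Concretely, a few-shot chain-of-thought prompt pairs each sample question with its intermediate steps as well as its answer. In the sketch below, the worked example, the “Step n:” and “A:” markers, and the function names are all illustrative assumptions; a consistent answer marker simply makes the final answer easy to pull out of the model’s reasoning.

```python
from typing import Optional

# A hypothetical worked example: question, intermediate steps, final answer
COT_EXAMPLES = [
    {
        "question": "Carol is three times Dan's age. Dan is 4. How old will Carol be in two years?",
        "steps": ["Carol is 3 * 4 = 12 now.", "In two years she will be 12 + 2 = 14."],
        "answer": "14",
    },
]


def build_cot_prompt(question: str, examples=COT_EXAMPLES) -> str:
    blocks = []
    for ex in examples:
        steps = "\n".join(f"Step {i}: {s}" for i, s in enumerate(ex["steps"], start=1))
        blocks.append(f"Q: {ex['question']}\n{steps}\nA: {ex['answer']}")
    # The new question gets the same structure, minus the steps the model must supply.
    blocks.append(f"Q: {question}\nWork through it step by step, then give the final answer after 'A:'.")
    return "\n\n".join(blocks)


def extract_answer(completion: str) -> Optional[str]:
    """Return whatever follows the last 'A:' marker, or None if the model omitted it."""
    idx = completion.rfind("A:")
    if idx == -1:
        return None
    return completion[idx + len("A:"):].strip()


prompt = build_cot_prompt("Alice was double Bob's age five years ago. Alice is 31 now. How old is Bob?")
print(prompt)
```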

Not all of your refinements will be targeting output accuracy. If you’re trying to improve the tone or style of your model outputs, try being very prescriptive about the LLM’s role. For example, for a content summarization use case, you could tell the LLM to act like a subject matter expert. Or maybe that’s too jargony for your end users and it’s better to ask the LLM to behave like a journalist. Like much of what we’ve discussed, it’s principally a product decision.
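Role instructions usually go in a system message. The sketch below assumes the list-of-`{"role", "content"}` message format used by chat-style APIs such as OpenAI’s Chat Completions; it only builds the messages and makes no API call. The two persona texts are hypothetical wordings, and choosing between them is the product decision described above.

```python
PERSONAS = {
    "expert": (
        "You are a subject matter expert. Summarize the document precisely, "
        "using the field's standard terminology."
    ),
    "journalist": (
        "You are a journalist writing for a general audience. Summarize the "
        "document in plain language and avoid jargon."
    ),
}


def summarization_messages(document: str, persona: str) -> list[dict[str, str]]:
    """Build a chat-style message list; the system message sets tone and style."""
    if persona not in PERSONAS:
        raise ValueError(f"unknown persona: {persona!r}")
    return [
        {"role": "system", "content": PERSONAS[persona]},
        {"role": "user", "content": f"Summarize the following:\n\n{document}"},
    ]


messages = summarization_messages("(full text of the document goes here)", "journalist")
```

Keeping personas in one table makes it cheap to A/B test tone: switching styles is a one-word change rather than a prompt rewrite.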

Validate your improvements on actual users

At a certain point, you’ll have a good feeling about your new and improved prompts. That’s not a reason to skip the last step of the prompt engineering cycle, though: you still have to validate that the changes are a net positive for your entire user base.

You can partially do this offline, that is, outside of your live product environment. It’s as simple as testing your model on a range of user inputs, not just the ones you personally can come up with. Refer to transcripts of past user-AI interactions for real samples of how to test your LLM. You can even have a separate LLM generate additional test cases based on these samples.
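An offline check can be as small as a list of test inputs, each paired with a pass/fail check, run against the old and new prompts. In this sketch the two `generate_*` functions are hypothetical stand-ins for calling your model with each prompt version, and the substring checks are deliberately simple; real checks might use more detailed rubrics.

```python
from typing import Callable

# (user input, check the response must pass); inputs would come from past transcripts
EvalCase = tuple[str, Callable[[str], bool]]

CASES: list[EvalCase] = [
    ("What's the weather like in Lisbon in May?", lambda out: "precipitation" in out.lower()),
    ("Is Reykjavik dark in December?", lambda out: "daylight" in out.lower()),
]


def pass_rate(generate: Callable[[str], str], cases: list[EvalCase]) -> float:
    """Fraction of test cases whose response passes its check."""
    passed = sum(1 for user_input, check in cases if check(generate(user_input)))
    return passed / len(cases)


def generate_old(user_input: str) -> str:
    return "It's usually pleasant there."  # stand-in for the model under the old prompt


def generate_new(user_input: str) -> str:
    # Stand-in for the model under a prompt that asks for precipitation and daylight
    return f"Precipitation and daylight summary for: {user_input}"


print(pass_rate(generate_old, CASES), pass_rate(generate_new, CASES))
```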

Eventually, you’ll need to test your model in production. You can start this as an A/B experiment and gradually scale up the percentage of your users who are exposed to the new model. Throughout, you should monitor your dashboards and core metrics.
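For a gradual rollout, each user should land in the same arm every time, and raising the percentage should only add users to the new prompt, never move existing ones back. Hashing the user ID is one common way to get both properties. A sketch; the experiment name and the 100-bucket scheme are assumptions.

```python
import hashlib


def bucket(user_id: str, experiment: str = "prompt-v2") -> int:
    """Map a user to a stable bucket in [0, 99] for a given experiment."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest[:8], 16) % 100


def uses_new_prompt(user_id: str, rollout_percent: int, experiment: str = "prompt-v2") -> bool:
    """True if this user should see the new prompt at the current rollout percentage."""
    return bucket(user_id, experiment) < rollout_percent


users = [f"user-{i}" for i in range(10_000)]
share = sum(uses_new_prompt(u, 10) for u in users) / len(users)
print(f"{share:.1%} of users see the new prompt")  # roughly 10%
```

Including the experiment name in the hash means two concurrent experiments bucket users independently of each other.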

Because your users’ experiences can be so heterogeneous, it’s conceivable that what you thought was an improved prompt actually makes the product worse for some users. In these cases, repeat the prompt engineering cycle: figure out the pattern of how and why the product is underperforming and tailor your prompts accordingly.
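A simple guard against this is to compare control and treatment per user segment, not just overall. The sketch flags segments whose success rate dropped by at least a chosen threshold. The segment names, counts, and 5-point threshold are made up, and a real analysis should also check whether each difference is statistically significant before acting on it.

```python
def rate(successes: int, total: int) -> float:
    return successes / total


def overall_rate(counts: dict[str, tuple[int, int]]) -> float:
    """Pooled success rate across all segments."""
    return rate(sum(s for s, _ in counts.values()), sum(t for _, t in counts.values()))


def segment_regressions(control, treatment, min_drop=0.05):
    """Segments where treatment's success rate trails control's by at least min_drop."""
    flagged = {}
    for segment, (c_succ, c_total) in control.items():
        if segment not in treatment:
            continue
        c_rate = rate(c_succ, c_total)
        t_rate = rate(*treatment[segment])
        if c_rate - t_rate >= min_drop:
            flagged[segment] = (c_rate, t_rate)
    return flagged


# Hypothetical (successes, total) counts per segment for each arm of the experiment
control = {"billing": (80, 100), "shipping": (70, 100), "returns": (60, 100)}
treatment = {"billing": (95, 100), "shipping": (60, 100), "returns": (62, 100)}

print(overall_rate(control), overall_rate(treatment))  # treatment looks better overall...
print(segment_regressions(control, treatment))  # ...but shipping regressed
```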

Don’t expect prompt engineering to solve all of your problems, either. Limits on how much text a model can ingest at once (the context window) mean that prompts can only go so far. You may find that techniques like retrieval-augmented generation (RAG) or fine-tuning your base LLM are more useful for some product problems.
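One practical consequence of the context window: when a prompt carries many few-shot examples, something has to decide which ones fit. The sketch below keeps examples in priority order until a token budget runs out. Counting whitespace-separated words is only a crude stand-in for a real tokenizer, and the budget value depends on the model, so treat both as assumptions.

```python
def approx_tokens(text: str) -> int:
    # Crude stand-in for the model's tokenizer; real tokenizers split text differently.
    return len(text.split())


def fit_examples(instruction: str, examples: list[str], query: str, budget: int) -> list[str]:
    """Keep examples, highest priority first, while the whole prompt stays within budget."""
    used = approx_tokens(instruction) + approx_tokens(query)
    if used > budget:
        raise ValueError("instruction and query alone exceed the budget")
    kept = []
    for example in examples:
        cost = approx_tokens(example)
        if used + cost > budget:
            break  # stop rather than skip, so priority order is preserved
        kept.append(example)
        used += cost
    return kept


examples = [
    "User 1: Active 28 days. // Super Fan",
    "User 2: Active 24 days. // Average Adopter",
    "User 3: Active 6 days. // Potential Churn",
]
print(fit_examples("Classify the following users", examples, "User 4: Active 2 days. //", budget=20))
```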

Context.ai’s product analytics enable systematic prompt engineering

If there’s one thing to take away about prompt engineering, it’s that it’s as much about the product rationale for a change as it is about the actual mechanics of writing prompts. To make prompt engineering worthwhile, you have to focus your energy on the most meaningful use cases where the product is currently falling short. Then, you have to verify that new prompts work as intended and solve the problem for your user base as a whole.

Context.ai is the analytics platform for LLM applications, giving you the requisite insights to engineer impactful prompts. You can monitor user behavior, track AI performance, and slice and dice your data to see key segments or even specific transcripts. To learn more about using Context.ai for your AI application, schedule a demo today.