Product Update | December 2023

December has been all about evals at Context.ai! New evals features include test set run comparisons, custom global LLM evaluators, per-query evaluators, creation of eval cases from production transcripts, a new evals onboarding, creation of test cases in the UI, and many UX improvements.

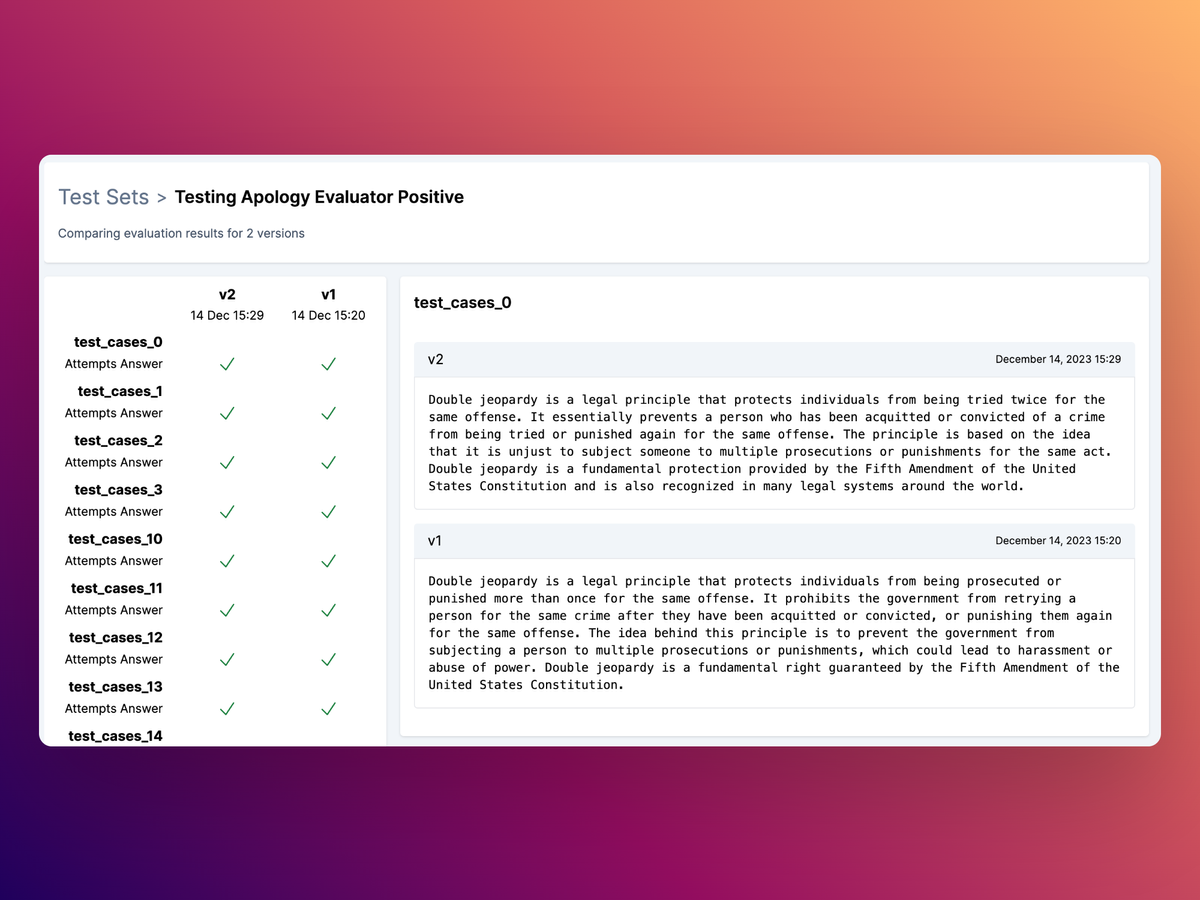

Test Set Run Comparisons

Completed Test Set runs can now be compared directly from the Test Set Version table. Multi-select any number of Test Set Versions and click Compare to see the executed Test Sets side by side, with the evaluator results and generated responses for each.
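
To picture what a comparison involves, here is a minimal Python sketch of the idea: index each run’s results by Test Case so that responses and evaluator outcomes line up across versions. The `CaseResult` record and `compare_runs` helper are hypothetical illustrations, not Context.ai’s data model or API.

```python
from __future__ import annotations

from dataclasses import dataclass


# Hypothetical record: one Test Case's outcome within one Test Set run.
@dataclass
class CaseResult:
    test_case_id: str
    response: str
    evaluator_results: dict[str, bool]  # evaluator name -> passed?


def compare_runs(runs: dict[str, list[CaseResult]]) -> dict[str, dict[str, CaseResult | None]]:
    """Align several runs by Test Case ID.

    `runs` maps a version label (e.g. "v1") to that run's results. The return
    value maps each Test Case ID to {version label -> result, or None if that
    run has no result for the case}.
    """
    # Index each run's results by Test Case ID for quick lookup.
    by_run = {label: {r.test_case_id: r for r in results} for label, results in runs.items()}
    # Collect every Test Case ID seen in any run, in a stable sorted order.
    case_ids = sorted({cid for index in by_run.values() for cid in index})
    return {cid: {label: by_run[label].get(cid) for label in runs} for cid in case_ids}


if __name__ == "__main__":
    v1 = [CaseResult("q1", "Paris", {"hallucination": True})]
    v2 = [
        CaseResult("q1", "Paris, France", {"hallucination": True}),
        CaseResult("q2", "I can't help with that.", {"refusal": False}),
    ]
    for case_id, row in compare_runs({"v1": v1, "v2": v2}).items():
        print(case_id, {label: (r.response if r else None) for label, r in row.items()})
```

A Test Case missing from one run shows up as `None` in that column, so versions with different Test Cases can still be compared row by row.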

Custom Global LLM Evaluators

When we launched evals, we supported only a fixed set of default global evaluators that run over every query, assessing common problems such as hallucination and response refusal. You can now define additional custom global LLM evaluators from the Evaluators page by selecting Create New Evaluator and adding an LLM prompt.
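
At its core, a custom LLM evaluator is a grading prompt that gets filled in for each query and sent to a model. The Python sketch below illustrates that idea under stated assumptions: the prompt wording, the PASS/FAIL answer format, and the `run_llm_evaluator` and `run_global_evaluator` helpers are hypothetical, and `llm` stands in for whatever model call you use. None of this is Context.ai’s API.

```python
from __future__ import annotations

from typing import Callable

# Hypothetical custom evaluator prompt, of the kind a user might add via
# Create New Evaluator. {query} and {response} are filled in per query.
POLITENESS_PROMPT = (
    "You are grading a chatbot response.\n"
    "Query: {query}\n"
    "Response: {response}\n"
    "Is the response polite and professional? Answer with exactly PASS or FAIL."
)


def run_llm_evaluator(prompt_template: str, query: str, response: str,
                      llm: Callable[[str], str]) -> bool:
    """Fill in the prompt, ask the grading model, and map its verdict to pass/fail.

    `llm` is any function that takes a prompt string and returns the model's text.
    """
    prompt = prompt_template.format(query=query, response=response)
    verdict = llm(prompt).strip().upper()
    if verdict.startswith("PASS"):
        return True
    if verdict.startswith("FAIL"):
        return False
    raise ValueError(f"Unexpected evaluator output: {verdict!r}")


def run_global_evaluator(prompt_template: str, pairs: list[tuple[str, str]],
                         llm: Callable[[str], str]) -> list[bool]:
    """A global evaluator runs over every (query, response) pair."""
    return [run_llm_evaluator(prompt_template, q, r, llm) for q, r in pairs]
```

Asking for a fixed verdict keyword keeps parsing simple; raising on anything else surfaces a misbehaving grader instead of silently counting it as a pass or fail.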

Per-Query Evaluators

Evaluators can now be enabled at the Test Case level as well as the Test Set level. This lets you define evaluators that run over only a single Test Case or a subset of the Test Cases in your Test Set, giving you more granular control over the pass criteria for your evaluators.
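
The effect of enabling evaluators at both levels can be sketched in a few lines of Python. This assumes that Test Case-level evaluators are added on top of the Test Set-level ones (our reading of "in addition to"); the `TestCase` and `TestSet` classes, the `evaluators_for` helper, and the evaluator names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class TestCase:
    case_id: str
    query: str
    # Evaluators enabled only for this Test Case.
    evaluators: set[str] = field(default_factory=set)


@dataclass
class TestSet:
    cases: list[TestCase]
    # Evaluators enabled at the Test Set level, i.e. for every Test Case.
    evaluators: set[str] = field(default_factory=set)


def evaluators_for(test_set: TestSet) -> dict[str, set[str]]:
    """Map each Test Case ID to the evaluators that would run on it.

    Assumption: Test Case-level evaluators are added to the Test Set-level ones.
    """
    return {case.case_id: test_set.evaluators | case.evaluators for case in test_set.cases}


if __name__ == "__main__":
    test_set = TestSet(
        cases=[
            TestCase("q1", "What is your returns window?", {"mentions_30_days"}),
            TestCase("q2", "Tell me a joke."),
        ],
        evaluators={"hallucination"},
    )
    print(evaluators_for(test_set))
```

Here only `q1` is checked against the query-specific criterion, while both Test Cases still get the Test Set-wide hallucination check.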

Create Test Cases From User Transcripts

Defining Test Cases is often time-consuming and frustrating, so you can now use production transcripts to make it much quicker and easier! If you have real user transcripts in your analytics, you can copy queries from any point in a conversation into new Test Cases.

Create New Test Cases

New Test Cases can now be created in the UI as well as through the API, making it easy to define additional tests within the product.

Fork Test Set Versions

Existing Test Set versions can now be forked in the UI to create a new version that you can edit. This allows you to vary system prompts or queries and assess the performance impact of your changes.

New Onboarding

Onboarding to evals no longer requires ingesting analytics transcripts: anyone can get started with evals before using our analytics products.

Hiring!

We’re hiring! We’re looking for a Founding GTM Lead to join our team in London. This is a generalist position and will be our first non-engineering hire. The ideal candidate has GTM experience at early-stage startups and with technical B2B products. More information here.