Context.ai is now integrated with Haystack 2.0!

Context.ai now provides product analytics to developers using Haystack through the new Context.ai Haystack integration. The integration makes it easy to export transcripts from Haystack 2.0 to Context.ai for analysis. The resulting analytics in Context.ai let developers monitor how their LLM product performs with real users and understand how and why people engage with it, so they can measure and improve the application over time.

Haystack is a leading open source framework for building production-ready LLM applications. With highly customizable pipelines and a large ecosystem of integrations, you can design exactly the application your use case needs and bring it reliably to production. You can learn more and get started at haystack.deepset.ai.

How does the integration work?

This launch adds a ContextAIAnalytics component that accepts lists of messages from Haystack and logs them to Context.ai using the Context.ai Thread Conversation Ingestion API.
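To make the data flow concrete, here is a minimal, self-contained sketch of what "accepting lists of messages and logging them per conversation" amounts to. It does not call Context.ai: `Message` and `InMemoryThreadLog` are hypothetical stand-ins for Haystack's `ChatMessage` and the ingestion API. They simply append every logged message to the transcript for its thread_id, so messages coming from several logging points end up in one conversation.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Message:
    """Hypothetical stand-in for a chat message: a role plus its text."""
    role: str
    content: str


class InMemoryThreadLog:
    """Hypothetical stand-in for an ingestion backend (no network calls).

    Each call to log() appends the given messages, in arrival order,
    to the transcript identified by thread_id.
    """

    def __init__(self) -> None:
        self.threads: dict[str, list[Message]] = defaultdict(list)

    def log(self, messages: list[Message], thread_id: str) -> None:
        self.threads[thread_id].extend(messages)


# Two logging points (prompt side and reply side) share one thread_id,
# so both contribute to the same transcript. The reply text is made up.
backend = InMemoryThreadLog()
thread_id = "conversation-1"
backend.log([Message("system", "Always respond in German."),
             Message("user", "Tell me about Berlin")], thread_id)
backend.log([Message("assistant", "Berlin ist die Hauptstadt Deutschlands.")], thread_id)

for msg in backend.threads[thread_id]:
    print(f"{msg.role}: {msg.content}")
```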

Add an instance of the ContextAIAnalytics component at each stage of your pipeline where you want to log messages. In the example below, the outputs of the prompt_builder and llm components are captured.

When running your pipeline, you must pass a thread_id in the parameters of each ContextAIAnalytics component; each unique thread_id identifies one conversation. You can optionally include a metadata dictionary, in which the user_id and model keys are reserved for special analytics.

```python
import os
import uuid

from haystack import Pipeline
from haystack.components.builders import DynamicChatPromptBuilder
from haystack.components.generators.chat import OpenAIChatGenerator
from haystack.dataclasses import ChatMessage

from context_haystack.context import ContextAIAnalytics

model = "gpt-3.5-turbo"

# Replace these placeholders with your own Context.ai and OpenAI keys.
os.environ["GETCONTEXT_TOKEN"] = "GETCONTEXT_TOKEN"
os.environ["OPENAI_API_KEY"] = "OPENAI_API_KEY"

prompt_builder = DynamicChatPromptBuilder()
llm = OpenAIChatGenerator(model=model)

# One analytics component per logging point: the built prompt and the LLM replies.
prompt_analytics = ContextAIAnalytics()
assistant_analytics = ContextAIAnalytics()

pipe = Pipeline()
pipe.add_component("prompt_builder", prompt_builder)
pipe.add_component("llm", llm)
pipe.add_component("prompt_analytics", prompt_analytics)
pipe.add_component("assistant_analytics", assistant_analytics)

# The built prompt goes both to the LLM and to prompt_analytics;
# the LLM replies go to assistant_analytics.
pipe.connect("prompt_builder.prompt", "llm.messages")
pipe.connect("prompt_builder.prompt", "prompt_analytics")
pipe.connect("llm.replies", "assistant_analytics")

# thread_id is unique to each conversation
context_parameters = {"thread_id": uuid.uuid4(), "metadata": {"model": model, "user_id": "1234"}}

location = "Berlin"
messages = [
    ChatMessage.from_system("Always respond in German even if some input data is in other languages."),
    ChatMessage.from_user("Tell me about {{location}}"),
]

response = pipe.run(
    data={
        "prompt_builder": {"template_variables": {"location": location}, "prompt_source": messages},
        # Both analytics components receive the same thread_id, so the prompt
        # and the reply are logged to the same conversation.
        "prompt_analytics": context_parameters,
        "assistant_analytics": context_parameters,
    }
)

print(response)
```
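The pipeline run above covers a single turn. In a multi-turn chat, every turn of the same conversation should reuse one thread_id, while a new conversation gets a fresh one. The sketch below shows that bookkeeping under that assumption; `Conversation` is a hypothetical helper, not part of the integration, and its `context_parameters()` method only rebuilds the same dictionary shape used in the example (a `thread_id` generated with `uuid.uuid4()` plus `metadata` with the reserved `model` and `user_id` keys).

```python
import uuid


class Conversation:
    """Hypothetical helper: create one instance per user conversation.

    The thread_id is generated once and reused for every turn, so all
    turns of this conversation are logged to the same thread.
    """

    def __init__(self, model: str, user_id: str) -> None:
        self.thread_id = uuid.uuid4()
        self.model = model
        self.user_id = user_id

    def context_parameters(self) -> dict:
        # Same shape as context_parameters in the pipeline example.
        return {
            "thread_id": self.thread_id,
            "metadata": {"model": self.model, "user_id": self.user_id},
        }


chat = Conversation(model="gpt-3.5-turbo", user_id="1234")
turn_1 = chat.context_parameters()
turn_2 = chat.context_parameters()
other_chat = Conversation(model="gpt-3.5-turbo", user_id="1234")

print(turn_1["thread_id"] == turn_2["thread_id"])  # True: same conversation
print(turn_1["thread_id"] == other_chat.context_parameters()["thread_id"])  # False: new conversation
```

On each turn you would pass `chat.context_parameters()` to both analytics components in `pipe.run(...)`, exactly where the example passes `context_parameters`.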

What can you do with the logged data?

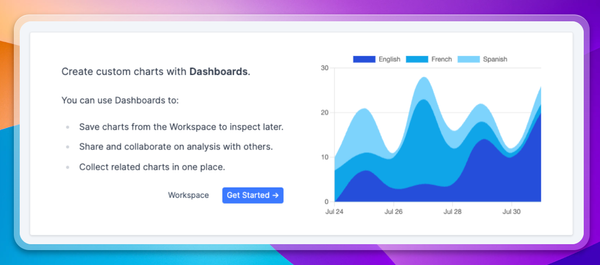

Context.ai provides product analytics using the data logged from Haystack, enabling users to answer questions such as:

- What are people using my application for, and what are the most frequent use cases?

- How well are user needs being met by my application?

- Where are user expectations not being met?

To answer these questions, Context.ai detects the topic of each transcript and assigns it a label. This lets builders segment user interactions by use case and see which use cases drive the most user engagement.
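Once every transcript carries a topic label, "what are the most frequent use cases?" reduces to counting. The sketch below is purely illustrative: the labels are made-up sample data, and Context.ai performs the topic detection and aggregation itself. It only shows the arithmetic behind segmenting by use case.

```python
from collections import Counter

# Made-up sample data: (thread_id, topic label) for each transcript.
labelled_transcripts = [
    ("t1", "travel advice"),
    ("t2", "translation"),
    ("t3", "travel advice"),
    ("t4", "history"),
    ("t5", "travel advice"),
    ("t6", "translation"),
]

# Count transcripts per label and list use cases from most to least frequent.
topic_counts = Counter(label for _, label in labelled_transcripts)
for topic, count in topic_counts.most_common():
    print(f"{topic}: {count} transcripts")
```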

Context.ai then monitors the performance of the application using user feedback signals such as thumbs up/down ratings, free-text feedback, and the sentiment of user inputs. This lets builders track not only the overall performance of their application but also its performance per use case, so they can identify which use cases are performing well and which have room for improvement.
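The difference between overall and per-use-case performance is easiest to see with numbers. The following sketch computes a thumbs-up rate globally and per topic from made-up feedback; it is an illustration of the idea, not how Context.ai calculates its metrics.

```python
from collections import defaultdict

# Made-up sample data: (topic label, feedback), where feedback is
# True for a thumbs up and False for a thumbs down.
feedback = [
    ("travel advice", True),
    ("travel advice", True),
    ("travel advice", False),
    ("translation", False),
    ("translation", False),
    ("history", True),
]


def thumbs_up_rate(votes: list[bool]) -> float:
    """Share of positive votes; assumes votes is non-empty."""
    return sum(votes) / len(votes)


# Overall rate across every vote.
global_rate = thumbs_up_rate([vote for _, vote in feedback])

# Group votes by topic, then compute the same rate per topic.
per_topic: dict[str, list[bool]] = defaultdict(list)
for topic, vote in feedback:
    per_topic[topic].append(vote)

print(f"global: {global_rate:.0%}")
for topic, votes in per_topic.items():
    print(f"{topic}: {thumbs_up_rate(votes):.0%} ({len(votes)} votes)")
```

Here the global rate of 50% hides that translation sits at 0% while travel advice is at about 67%, which is the kind of gap per-use-case tracking is meant to surface.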

Learn more about Context.ai in this overview video